[AINews] Ten Commandments for Deploying Fine-Tuned Models • Buttondown

Chapters

AI Twitter Recap

Open-Source AI Models and Advancements

Stability.ai (Stable Diffusion) Discord

Detailed by-Channel Summaries and Links

LLM Finetuning Workshop Highlights

LLM Finetuning Discussion on Jarvislab, Tokenizer Gotchas Shared, and Learning Model Accelerate

HuggingFace AI Community Highlights

AI Community Discussions

Interpreting AI Model Performance and Capabilities

LLM Studio Discussion Highlights

Modular (Mojo 🔥) Discussions

Latent Space AI General Chat

Tensorlake launches Indexify

Event Tracking and Channel Comments

AI Twitter Recap

- Anthropic's Claude AI and Interpretability Research

- Feature alteration in Claude AI: @AnthropicAI demonstrated how altering internal 'features' in their AI, Claude, could change its behavior, such as making it intensely focus on the Golden Gate Bridge. They released a limited-time 'Golden Gate Claude' to showcase this capability.

- Understanding how large language models work: @AnthropicAI expressed increased confidence in beginning to understand how large language models really work, based on their ability to find and alter features within Claude.

- Honesty about Claude's knowledge and limitations: @alexalbert__ shared insights on Claude's knowledge and limitations, emphasizing the importance of being honest about AI capabilities.

Open-Source AI Models and Advancements

- Open-source models catching up to closed-source: @bindureddy highlighted that on the MMLU benchmark, open-source models are nearing the performance of closed-source models like GPT-4 for simple consumer use cases. However, more advanced models are still needed for complex AI agent and automation tasks.

- New open-source model releases: @osanseviero shared several new open-source model releases this week, including multilingual models (Aya 23), long context models (Yi 1.5, M2-BERT-V2), vision models (Phi 3 small/medium, Falcon VLM), and others (Mistral 7B 0.3).

- Phi-3 small outperforms GPT-3.5 Turbo with fewer parameters: @rohanpaul_ai pointed out that Microsoft's Phi-3-small model, with only 7B parameters, outperforms GPT-3.5 Turbo across language, reasoning, coding, and math benchmarks, demonstrating rapid progress in compressing model capabilities.

Stability.ai (Stable Diffusion) Discord

Perplexity AI Discord

- Users said Perplexity AI outshines ChatGPT at processing CSV files because it accepts direct CSV uploads; Julius AI was recommended for data analysis.

- Some users preferred GPT-4 over Claude 3 Opus, citing Claude 3 Opus's content restrictions.

- Upgrades to Pro Search prompted discussion of its multi-step reasoning features and of the real-world impact of the API specs.

- Integrating external tools with Claude via the API drew interest, with discussion of custom function calls and serverless backends.

- Discussions on AI ethics explored building ethical monitoring capabilities into GPTs for workplace communication and legal defensibility.

Detailed by-Channel Summaries and Links

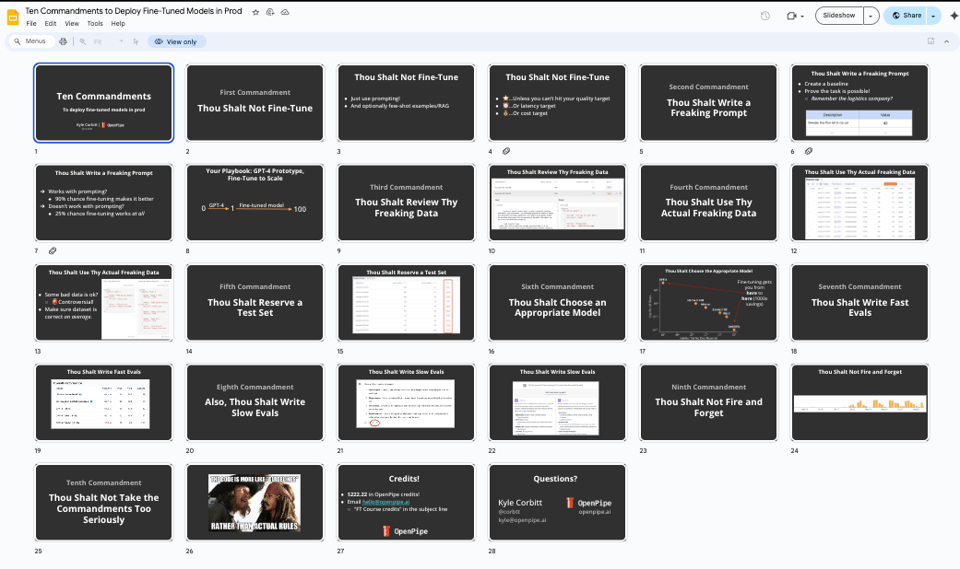

This section provides detailed summaries of discussions in various LLM Finetuning Discord channels, covering concerns about overfitting in semantic similarity tasks, questions about the fine-tuning process, and changes to the OpenAI platform's sidebars. Members also shared insights into Rasa's approach to conversational AI, a recording of a conference talk by Kyle Corbitt, and various useful links.

LLM Finetuning Workshop Highlights

This section delves into the insights shared during LLM Finetuning workshops conducted by Hamel and Dan. It covers various topics such as template clarifications for LLM finetuning, the importance of delimiters in model behavior, the concept of 'end of text' token usage, and homework assignments related to LLM use cases. Additionally, it discusses the utilization of platforms like Modal for model training, dataset management, and examples provided for running models effectively. The section also includes links to resources mentioned during the workshops.
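To make the template, delimiter, and end-of-text points concrete, here is a minimal sketch. The Alpaca-style `### Instruction:` / `### Response:` delimiters and the `</s>` end token are assumptions chosen for illustration, not the workshop's exact template; a real setup should use its tokenizer's own EOS token. The properties that matter are that the inference prompt is an exact prefix of the training text, and that each training example ends with the end token so the model learns when to stop generating.

```python
# Hypothetical Alpaca-style template: the delimiters are assumptions.
TEMPLATE = "### Instruction:\n{instruction}\n\n### Response:\n"
EOS = "</s>"  # assumed end-of-text string; use your tokenizer's real EOS token


def build_training_text(instruction: str, response: str) -> str:
    """One training example: delimited prompt, then the response, then EOS."""
    return TEMPLATE.format(instruction=instruction) + response + EOS


def build_inference_prompt(instruction: str) -> str:
    """Stop right after the response delimiter so the model writes the answer.

    This must use exactly the same template as training.
    """
    return TEMPLATE.format(instruction=instruction)


if __name__ == "__main__":
    train = build_training_text("Name a primary color.", "Red.")
    prompt = build_inference_prompt("Name a primary color.")
    print(repr(train))
    # The inference prompt should be an exact prefix of the training text.
    print(train.startswith(prompt))
```

Keeping both functions on a single shared template string is a simple way to avoid train/inference template drift.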

LLM Finetuning Discussion on Jarvislab, Tokenizer Gotchas Shared, and Learning Model Accelerate

LLM Finetuning Discussion on Jarvislab

- A user hit a BFloat16-related RuntimeError on a T4 GPU in Google Colab, which led them to switch to Jarvis-labs. Checking the PyTorch and CUDA versions and switching to the example configurations solved the issue.
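The T4 error is consistent with a hardware limit: NVIDIA GPUs gain native bfloat16 support with the Ampere generation (compute capability 8.0), while the T4 is a Turing card (compute capability 7.5). Below is a minimal sketch of choosing a dtype from the compute capability. In PyTorch the capability tuple can be read with `torch.cuda.get_device_capability()`; falling back to `float16` below Ampere is an assumed policy for illustration, not the fix the user applied.

```python
def pick_dtype(compute_capability: tuple[int, int]) -> str:
    """Choose a training dtype name from a (major, minor) compute capability.

    Assumption: prefer bfloat16 where it is native (Ampere, 8.0 and newer),
    otherwise fall back to float16.
    """
    major, _minor = compute_capability
    return "bfloat16" if major >= 8 else "float16"


if __name__ == "__main__":
    # T4 is Turing (7.5); A100 is Ampere (8.0).
    for name, cap in [("T4", (7, 5)), ("A100", (8, 0))]:
        print(name, pick_dtype(cap))
```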

Guide on Tokenizer Gotchas Shared

- A user shared Hamel's notes on tokenizer gotchas, addressing prompt construction intricacies and behavioral differences due to tokenization handling.
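As a hedged illustration of one gotcha of this kind: tokenizing a prompt and its completion separately can yield different tokens than tokenizing the concatenated string, especially around whitespace. The toy greedy longest-match tokenizer and its five-entry vocabulary below are hypothetical stand-ins for a real BPE tokenizer, which shows the same effect with its leading-space tokens.

```python
# Toy greedy longest-match tokenizer; the vocabulary is hypothetical.
VOCAB = {"Answer", ":", " ", " yes", "yes"}


def tokenize(text, vocab=VOCAB):
    """Repeatedly take the longest vocabulary entry matching at the cursor."""
    max_len = max(len(tok) for tok in vocab)
    tokens, i = [], 0
    while i < len(text):
        for size in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + size]
            if piece in vocab:
                tokens.append(piece)
                i += size
                break
        else:
            tokens.append(text[i])  # unknown character becomes its own token
            i += 1
    return tokens


if __name__ == "__main__":
    prompt, completion = "Answer: ", "yes"
    separate = tokenize(prompt) + tokenize(completion)
    joined = tokenize(prompt + completion)
    print(separate)  # ['Answer', ':', ' ', 'yes']
    print(joined)    # ['Answer', ':', ' yes']
```

The trailing space in the prompt is what splits the difference here, which is why a common mitigation is to keep whitespace out of the prompt/completion boundary and to compare token IDs from training and inference directly.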

Optimizing Axolotl runs on different GPUs

- A member asked how to adjust Accelerate configs to optimize Axolotl runs on different GPUs; the suggestion was to map those settings into the Axolotl YAML config.
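One setting that usually needs adjusting when the same run moves between GPU setups is batch composition. Assuming plain data parallelism and Axolotl-style `micro_batch_size` and `gradient_accumulation_steps` settings (check the Axolotl docs for the exact keys), the effective global batch is micro_batch_size × gradient_accumulation_steps × number of GPUs, so accumulation can be rescaled to keep it constant. The helper below is hypothetical.

```python
def grad_accum_steps(global_batch: int, micro_batch_size: int, num_gpus: int) -> int:
    """Accumulation steps that keep the global batch fixed (hypothetical helper).

    global_batch = micro_batch_size * gradient_accumulation_steps * num_gpus
    """
    per_step = micro_batch_size * num_gpus
    if global_batch % per_step != 0:
        raise ValueError(
            f"global batch {global_batch} is not divisible by {per_step}"
        )
    return global_batch // per_step


if __name__ == "__main__":
    # Same target batch of 32 on one GPU vs. four GPUs
    print(grad_accum_steps(32, micro_batch_size=4, num_gpus=1))  # 8
    print(grad_accum_steps(32, micro_batch_size=4, num_gpus=4))  # 2
```

Raising on a non-divisible batch is a deliberate choice: silently rounding would change the effective batch size and, with it, the behavior of a tuned learning rate.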

Resources for learning Accelerate

- Members discussed how to get started with Accelerate for fine-tuning tasks, recommending higher-level abstractions like Axolotl as simpler to use while still allowing in-depth learning.

Hyperparameters and inference precision

- Members asked about optimal learning rates for models trained for longer versus undertrained models, and about BF16 precision issues on T4 GPUs. Suggestions included using hardware-compatible settings or converting the weights to a supported datatype.
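On converting weights: bfloat16 keeps float32's 8-bit exponent, so it can represent magnitudes far beyond float16's largest finite value (65504), and a naive cast can overflow to infinity. Below is a stdlib-only sketch of a range-checked conversion using Python's `struct` `'e'` (IEEE 754 half-precision) format. With real tensors the cast itself would be something like PyTorch's `.to(torch.float16)`; raising on out-of-range values is an assumed policy.

```python
import struct

FP16_MAX = 65504.0  # largest finite IEEE 754 half-precision value


def to_fp16(values):
    """Round floats to the nearest fp16 value, refusing out-of-range inputs.

    bf16 shares fp32's exponent range, so bf16 weights can hold magnitudes
    that overflow fp16; this check surfaces them instead of producing inf.
    """
    out = []
    for v in values:
        if abs(v) > FP16_MAX:
            raise ValueError(f"{v} overflows fp16 (max {FP16_MAX})")
        # Pack to binary16 and back to get the rounded fp16 value.
        out.append(struct.unpack("<e", struct.pack("<e", v))[0])
    return out


if __name__ == "__main__":
    print(to_fp16([1.0, -2.5, 0.1]))  # 0.1 is rounded to the nearest fp16 value
```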

HuggingFace AI Community Highlights

HuggingFace ▷ #today-im-learning (8 messages🔥):

- A member shared enthusiasm for Deep RL for Embodied AI applications and invited detailed progress updates.

- Fast.ai's part 1 & part 2 courses were recommended for AI beginners, covering practical deep learning tasks using HuggingFace libraries.

- A Coursera course on Generative AI with Large Language Models was suggested for foundational knowledge in AI.

- An event was announced for an in-depth review of the PixART diffusion model for text-to-image synthesis.

Links mentioned:

- Practical Deep Learning for Coders

- Generative AI with Large Language Models

- Arxiv Dives with Oxen.AI - Fine Tuning Diffusion Transformers

HuggingFace ▷ #cool-finds (3 messages):

- A study was shared discussing the use of ChatGPT and other LLMs in next-generation drug discovery.

- An integration of PostgresML with LlamaIndex was shared, promising new possibilities for AI applications.

AI Community Discussions

The AI community discussed new GPU models, including the 5090, and how they could affect data center GPUs such as the H100 and A100. Members also discussed AI models such as Mobius, which was touted as the best diffusion-based image model. Users shared experiences and insights on model training, raised performance concerns, discussed new model support announcements, reported issues with specific models, suggested improvements, and shared links to further resources.

Interpreting AI Model Performance and Capabilities

This section discusses the performance and capabilities of various AI models, focusing on the Mobius model's impressive image generation, the debate over whether LLMs truly understand, and a shared GitHub repository of RLHF models. It also covers a new script that uses llama.cpp for function calling, discussions of the Hermes model, LoRA resources, and introductions from new developers. Other topics include the training costs of models like GPT-3, RAG integration, and methods for enhancing LLM reasoning. The Eleuther section covers JEPA, RoPE (rotary position embeddings), the Modula training strategy, the Chameleon model, and Bitune's impact on instruction-tuning in LLMs, among other research insights and debates.

LLM Studio Discussion Highlights

- Model Updates Announced: A new 35B model is on its way, with discussions on compatibility with the latest LM Studio version.

- Recommendations for Conversational Models: Suggestions were made for Wavecoder Ultra and Mistral-Evolved-11b-v0.1 models.

- Loading Issues with Specific Hardware: Users encountered loading problems with specific hardware configurations.

- CUDA MODE discussions: Topics included sparsity, model quantization, and challenges across diverse tasks.

- Training stability: Members discussed gradient spikes after batch size changes and how to handle exploding gradients, and shared effective solutions.
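The summary doesn't say which fix was adopted for exploding gradients. One common approach is clipping gradients by their global L2 norm (PyTorch ships this as `torch.nn.utils.clip_grad_norm_`). The sketch below shows the idea in plain Python, with gradients represented as nested lists of floats purely for illustration.

```python
import math


def clip_by_global_norm(grads, max_norm):
    """Scale all gradients together if their combined L2 norm exceeds max_norm.

    `grads` is a list of per-parameter gradient lists (plain floats here).
    Returns the (possibly scaled) gradients and the pre-clipping norm.
    """
    total_norm = math.sqrt(sum(g * g for grad in grads for g in grad))
    if total_norm <= max_norm:
        return [list(grad) for grad in grads], total_norm
    scale = max_norm / total_norm
    return [[g * scale for g in grad] for grad in grads], total_norm


if __name__ == "__main__":
    clipped, norm = clip_by_global_norm([[3.0], [4.0]], max_norm=1.0)
    print(norm, clipped)  # the norm of 5.0 is scaled down to 1.0
```

Scaling every gradient by the same factor preserves the update direction and only shrinks its length, which is why clipping by global norm is usually preferred over clipping each value independently.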

Modular (Mojo 🔥) Discussions

Funding Python Libraries' Port to Mojo:

A user asked whether there was a budget to incentivize developers of major Python libraries, such as psycopg3, to port their work to Mojo. Others noted that Mojo's fast-evolving API and the lack of a stable FFI story could burn out maintainers if a port were attempted prematurely.

Prospects of HVM for Various Languages:

Members discussed using HVM to run various programming languages, such as Python and Haskell, much as the JVM hosts multiple languages. Attention was drawn to an explanation by Victor Taelin of HVM's potential despite its current performance limitations.

Low-bit-depth networks spark debate:

Discussions on the utility of low-bit-depth networks for embedded AI systems emphasized the value of dedicated support in programming languages: an easy, language-supported way to specify limited bit depth would be a big step toward building small embedded AI systems.
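As an illustration of the manual work that language-level bit-depth support would hide, here is a minimal sketch of packing signed 4-bit integers two per byte. It is written in Python rather than Mojo, and the 4-bit two's-complement, low-nibble-first layout is an assumption chosen for the example.

```python
def pack_int4(values):
    """Pack signed 4-bit integers (-8..7) two per byte, low nibble first."""
    if any(not -8 <= v <= 7 for v in values):
        raise ValueError("values must fit in signed 4 bits (-8..7)")
    padded = list(values) + [0] * (len(values) % 2)  # pad to an even count
    out = bytearray()
    for lo, hi in zip(padded[0::2], padded[1::2]):
        out.append((lo & 0xF) | ((hi & 0xF) << 4))
    return bytes(out)


def unpack_int4(data, count):
    """Inverse of pack_int4; sign-extends each nibble back to a Python int."""
    vals = []
    for byte in data:
        for nibble in (byte & 0xF, byte >> 4):
            vals.append(nibble - 16 if nibble >= 8 else nibble)
    return vals[:count]  # drop the padding nibble, if any


if __name__ == "__main__":
    weights = [-8, -1, 0, 7, 3]
    packed = pack_int4(weights)
    print(len(packed), unpack_int4(packed, len(weights)))
```

Every masking, shifting, and sign-extension step here is bookkeeping that a language-level "4-bit integer" type could make implicit.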

Mojo function argument handling update:

A user highlighted a recent change in how Mojo handles function arguments: instead of making copies by default, arguments now use the borrowed convention, and a copy is made only if the argument is mutated. The update aims to improve consistency, performance, and ease of use, as outlined in the changelog on GitHub.

Benchmarking in Jupyter vs Compiling questioned:

A member asked about the reliability of benchmarking in a Jupyter notebook versus compiling. Another responded that one should benchmark in an environment similar to production and provided detailed tips to enhance precision, emphasizing compiled benchmarks and CPU isolation techniques.
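The advice about repeated, production-like measurements can be illustrated with Python's standard `timeit` module. This minimal sketch covers only warmup and repetition (reporting best and median per-call time); it does not reproduce the Mojo-specific tips, compiled benchmarks, or CPU isolation mentioned in the discussion.

```python
import statistics
import timeit


def benchmark(fn, *, warmup=3, repeat=7, number=100):
    """Time fn with warmup runs and repeated measurements.

    Returns per-call seconds as (best, median) across `repeat` samples.
    """
    for _ in range(warmup):
        fn()  # warm caches and allocators before measuring
    samples = timeit.repeat(fn, repeat=repeat, number=number)
    per_call = [s / number for s in samples]
    return min(per_call), statistics.median(per_call)


if __name__ == "__main__":
    best, median = benchmark(lambda: sum(range(10_000)))
    print(f"best {best * 1e6:.1f} us, median {median * 1e6:.1f} us")
```

Reporting both numbers is a design choice: the minimum approximates the noise-free cost, while the median shows what a typical run looks like on a busy machine.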

Latent Space AI General Chat

The general chat in the Latent Space AI channel (36 messages) covered improvements in wizard model responses, the release of the Phi-3 Vision model, clarification of Llama 3 prompt formatting, upcoming parameter updates for Llama 3 models, and Google's Gemini API returning blank outputs. Users also shared test links for Phi-3 Vision and recommended the CogVLM2 model for vision tasks.

Tensorlake launches Indexify

- Members discussed the new open-source product by Tensorlake, called Indexify, which provides a real-time data framework for LLMs.

- The design choices behind Indexify were dissected, sparking interest in its creator's background with Nomad and questions about the sufficiency and monetization of the extractors provided.

- A post by Clementine, who runs the HF OSS Leaderboard, was shared. It covers LLM evaluation practices and the significance of leaderboards and non-regression testing.

- Mark Riedl's revelation of a website poisoning attack affecting Google's AI Overviews led to discussion of using custom search engine settings in the browser to bypass AI Overviews and avoid such issues.

- Members discussed Thomas Dohmke's TED Talk on how AI is lowering the barriers to coding, expressing mixed feelings about AI's reliability for coding while acknowledging UX improvements that make it quicker to work around issues.

Event Tracking and Channel Comments

- Member seeks Google Calendar integration for event tracking: A member asked whether there was an event calendar that could be imported into Google Calendar so they would not miss events, adding a sad emoji to signal the need for an easier way to track scheduled activities.

- Channel comment on general-ml: evelynciara expressed their satisfaction with the existence of the general-ml channel.

- URL shared in DiscoResearch general channel: datarevised shared a URL in the DiscoResearch general channel.

FAQ

Q: What is the significance of altering internal 'features' in AI models like Claude AI by Anthropic?

A: Altering internal 'features' in AI models like Claude AI can change their behavior and showcase new capabilities, as demonstrated by [@AnthropicAI](https://twitter.com/AnthropicAI/status/1793741051867615494?utm_source=ainews&utm_medium=email&utm_campaign=ainews-ten-commandments-for-deploying-fine-tuned) with the 'Golden Gate Claude'.

Q: How does the performance of open-source models compare to closed-source models, according to @bindureddy's insight?

A: Open-source models are nearing the performance of closed-source models like GPT-4 on simple consumer use cases, as highlighted by @bindureddy.

Q: What was the key highlight of Microsoft's Phi-3-small model, as pointed out by @rohanpaul_ai?

A: Microsoft's Phi-3-small model, with 7B parameters, outperforms GPT-3.5 Turbo across language, reasoning, coding, and math benchmarks, showcasing rapid progress in compressing model capabilities.

Q: In the Perplexity AI Discord discussions, why do users prefer GPT-4 over Claude 3 Opus?

A: Users prefer GPT-4 over Claude 3 Opus due to content restrictions on Claude 3 Opus, as highlighted in the Perplexity AI Discord discussions.

Q: What were the main points discussed during the LLM Finetuning workshops by Hamel and Dan?

A: The LLM Finetuning workshops covered topics such as template clarifications, the importance of delimiters in model behavior, 'end of text' token usage, and homework assignments related to LLM use cases.

Q: What were the insights shared in the AI community discussions around new GPU models and AI model training?

A: The AI community discussed topics like the Mobius model's performance, data center GPUs like H100/A100, model training experiences, performance concerns, and new model support announcements.

Q: What were the key discussions in the Latent Space AI channel chat with 36 messages?

A: Discussions included topics such as improvements in wizard model responses, the release of Phi-3 Vision model, Llama 3 model prompt formatting, upcoming parameter updates, and issues with Google's Gemini API.

Q: What insights were shared about the new open-source product, Indexify, by Tensorlake?

A: Indexify is a real-time data framework for LLMs developed by Tensorlake, sparking discussions on its design choices, creator's background with Nomad, and questions about the provided extractors' sufficiency and monetization.

Q: What was the discussion about a website poisoning attack affecting Google's AI overviews?

A: A website poisoning attack affecting Google's AI overviews was discussed, leading to talks about using custom search engine browser bypasses to avoid such issues.

Q: What prospects of using HVM for running various programming languages were highlighted in one of the discussions?

A: There was a discussion about using the HVM for running multiple programming languages like Python and Haskell, similar to the JVM, emphasizing the potential despite current performance limitations.