[AINews] Shazeer et al (2024): you are overpaying for inference >13x

Chapters

Memory and Caching Techniques for Efficient Inference

AI Model Releases and Benchmarks

Open-Source AI Developments and Collaborations

AI Stack Development Discussions

Discord Channels Discussions

Perplexity AI on Discord

HF Experiences Server Overload Amid New Model Releases

LM Studio General Discussions

ML-Drama

Interconnects, OpenInterpreter, LLM Finetuning

Latent Space, AI Stack Devs, MLOps @Chipro Discussions

Memory and Caching Techniques for Efficient Inference

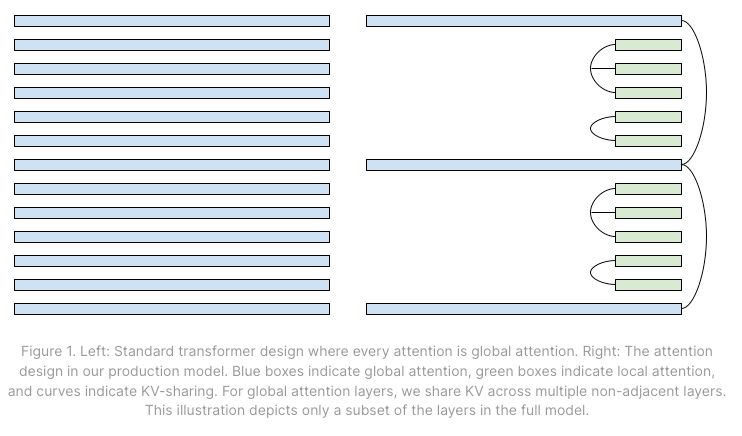

Noam Shazeer's blog post describes how Character.AI serves LLM inference at large batch sizes while cutting costs. It highlights memory-efficient techniques, including MQA over GQA, hybrid attention horizons, and cross-layer KV-sharing, along with a stateful caching system for managing long dialogues in Character.AI chats. The newsletter also covers Anthropic's release of Claude 3.5 Sonnet, with improved performance and new features, and outlines community discussions on LLM architecture scaling, retrieval methods, and benchmarks.
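To make the memory argument concrete, here is a minimal sketch of how KV-cache size responds to the three techniques named above. The model shape (32 layers, 32 heads, head dimension 128, 16-bit values), the 1024-token local window, the one-global-layer-in-six pattern, and the sharing group of two are all illustrative assumptions, not Character.AI's actual configuration. The function `kv_cache_bytes` and its parameters are hypothetical names introduced for this example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AttnConfig:
    """Hypothetical transformer shape; all values are illustrative."""
    n_layers: int
    n_kv_heads: int          # 1 = MQA, a few = GQA, all heads = MHA
    head_dim: int
    bytes_per_elem: int = 2  # assumes 16-bit keys and values


def kv_cache_bytes(
    cfg: AttnConfig,
    seq_len: int,
    kv_share_group: int = 1,
    local_window: Optional[int] = None,
    global_every: int = 1,
) -> int:
    """Estimate KV-cache bytes for one sequence.

    kv_share_group: adjacent layers sharing one cache (cross-layer KV-sharing).
    local_window:   if set, local layers cache at most this many tokens
                    (hybrid attention horizons).
    global_every:   every N-th layer attends over the full context.
    Simplification: the first layer of each sharing group owns the cache
    and decides whether it is global or local.
    """
    # Factor of 2 covers keys plus values.
    per_token = 2 * cfg.n_kv_heads * cfg.head_dim * cfg.bytes_per_elem
    total = 0
    for layer in range(0, cfg.n_layers, kv_share_group):
        is_global = local_window is None or layer % global_every == 0
        tokens = seq_len if is_global else min(seq_len, local_window)
        total += per_token * tokens
    return total


mha = AttnConfig(n_layers=32, n_kv_heads=32, head_dim=128)
gqa = AttnConfig(n_layers=32, n_kv_heads=8, head_dim=128)
mqa = AttnConfig(n_layers=32, n_kv_heads=1, head_dim=128)

for name, cfg in [("MHA", mha), ("GQA", gqa), ("MQA", mqa)]:
    print(name, kv_cache_bytes(cfg, seq_len=8192) / 2**20, "MiB")

combined = kv_cache_bytes(
    mqa, seq_len=8192, kv_share_group=2, local_window=1024, global_every=6
)
print("MQA + local windows + sharing", combined / 2**20, "MiB")
```

Under these assumed numbers, the cache for an 8192-token sequence shrinks from 4 GiB with full multi-head attention to 128 MiB with MQA, and further once local windows and cross-layer sharing are applied. The real savings depend on the actual architecture, which this sketch does not attempt to reproduce.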

AI Model Releases and Benchmarks

- Hermes 2 Theta 70B from Nous Research and Turbcat 8b both claim benchmark wins over models such as Llama-3 Instruct, sparking discussion across Discord channels.

- Claude 3.5 Sonnet draws mixed reactions across Discord discussions, with comments on its Python coding performance and on its JavaScript limitations compared to GPT-4.

- The release of DeepSeek Coder v2, reported to perform comparably to GPT-4 Turbo, prompts conversations about specialized coding models.

AI Development Tools and Infrastructure Challenges

- Octomind's move away from LangChain prompts developers to explore alternatives such as LangGraph, while discussions of hardware limitations for running advanced LLMs and of GPU choices heat up in Discord channels.

- Groq's support for the Whisper model, running at a claimed 166x real-time speed, draws both attention and skepticism, with users examining its potential applications and limitations.
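As a rough sense of scale for the 166x figure, the arithmetic can be sketched as follows. The helper `transcription_seconds` is a hypothetical name for illustration, and the calculation assumes the speed factor applies uniformly and ignores upload, queueing, and network time.

```python
def transcription_seconds(audio_seconds: float, speed_factor: float) -> float:
    """Ideal processing time for audio at a given real-time speed factor.

    Assumes the factor holds for the whole file; real latency also
    includes upload, queueing, and network overhead.
    """
    if speed_factor <= 0:
        raise ValueError("speed_factor must be positive")
    return audio_seconds / speed_factor


one_hour = 60 * 60
print(f"{transcription_seconds(one_hour, 166):.1f} s for one hour of audio")
```

At the claimed rate, an hour of audio would take a little under 22 seconds of pure processing time, which helps explain both the interest and the skepticism.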

Ethical Concerns in AI Industry Practices

- OpenAI's collaboration with governments raises questions about AI regulation and AGI safety strategies, sparking debates across Discord channels.

- Perplexity AI faces criticism over its practices, triggering dialogues on ethical considerations in AI development and deployment.

- OpenAI's public relations missteps receive attention in various Discord communities, leading to speculations about their impact on the company's image and strategies.

Open-Source AI Developments and Collaborations

- StoryDiffusion: An open-source alternative to Sora has launched under the MIT license, although the weights have not been released yet.

- OpenDevin: An open-source autonomous AI engineer based on Devin by Cognition has been released, accompanied by a webinar and increasing interest on GitHub.

- Machine Learning Paper Collaboration: Collaboration calls have been made for an open-source machine learning paper predicting IPO success, hosted at RicercaMente.

- LlamaIndex Integration Efforts: Community efforts are focused on LlamaIndex integration, with challenges faced in Supabase Vectorstore and package imports after updates. Documentation can be found in the llama-hub.

AI Stack Development Discussions

In the AI Stack Development Discord channel, members discuss AI models, hardware capabilities, error optimization, and more. Topics include resolving GPU issues, integrating Ollama models, the challenges of running high-end LLMs, and using frameworks such as LangChain and Cohere. Members also share updates on model releases, Discord bot glitches, and tools such as tinygrad and the Mojo compiler. The community additionally discusses AI innovation, best practices, and the future direction of AI applications in software development.

Discord Channels Discussions

This section covers discussions from several AI development Discord channels. Highlights include reports of spam incidents, invitations to a virtual meetup for Recommendation Systems enthusiasts, debates on data structures in PyTorch, reflections on AI-generated apologies, discussions of OpenAI models, suggestions for improving torch compile times, and tricks for measuring processing time accurately. Each sub-section focuses on the topics raised in a particular channel.

Perplexity AI on Discord

Perplexity AI (General Chat)

- Members discussed using the Complexity extension to switch between models such as Opus, Sonnet 3.5, and GPT-4 Turbo for coding. Some praised Sonnet 3.5 for its features and for performance comparable to Opus.

- A debate emerged over the availability and functionality of Claude 3.5 Sonnet, with reports of the mobile app displaying inconsistent versions.

- Limits on Opus usage were highlighted, with one member hesitant to use it because of the daily cap. Users also debated hardware choices for inference, comparing TPUs with Nvidia GPUs.

- Curiosity about the hardware used for inference at Anthropic led to a discussion on AWS Trainium for efficient ML training.

Perplexity AI (Sharing Chat)

- An analogy presented in 'Piloting AI' highlighted the evolving interaction with AI towards a problem-solving partnership.

- Hermes 2 Theta by Nous Research exceeded benchmarks set by Llama-3 Instruct 70B, showcasing advanced capabilities similar to GPT-4.

- A post on popular YouTube creators featured MrBeast, Like Nastya, and PewDiePie as leading figures.

- A study on African elephants using unique vocalizations to address each other showcased their advanced cognitive abilities.

- The impact of lithium-ion battery systems on off-road vehicles was explored, highlighting enhanced performance and cost benefits.

Perplexity AI (PPLX-API Chat)

- Users asked about resetting API keys and limiting research to specific websites. Instructions were provided for managing API keys on the Perplexity settings page.

- The request to limit API research to specified websites was compared to Google's site: operator, but no direct answer was provided.

HF Experiences Server Overload Amid New Model Releases

- Claude 3.5 handles obscure programming languages: Claude 3.5 correctly solved problems in an obscure programming language that a user had invented. "Claude 3.5 sonnet is insane."

- HF experiences server overload amid new model releases: Hugging Face's servers were overloaded by the influx of new model releases.

LM Studio General Discussions

Members discussed various topics related to LM Studio in this section. From fixing GPU utilization and running vision models on CPU to integrating Ollama models and resolving model issues, users shared valuable insights and solutions. They also explored issues with quantization, memory usage, and GPU offloading problems. Additionally, concerns about PCIe slots, adapters, and flash attention for LM Studio configuration were addressed. The discussions covered a wide range of technical aspects and tips to enhance the LM Studio experience.

ML-Drama

A member expressed frustration over Mira's PR mistakes with other members agreeing on the need for improvement. Nathan Lambert noted the lack of PR training for Mira, sparking a debate on its necessity. Furthermore, the discussion touched upon Nathan Lambert's switch to using Claude and the perception of Mira as a potential scapegoat within OpenAI to deflect criticism from higher-ups.

Interconnects, OpenInterpreter, LLM Finetuning

In the Interconnects section, members connect with one another on Discord, including a user looking for someone named Snail. The OpenInterpreter sections cover a YouTube demo showcasing Windows/Linux compatibility, positive feedback on the Claude 3.5 Sonnet model, installation guidance for Open Interpreter on Windows, the sharing of DeepSeek Coder v2, and a revelation about Open Interpreter's testing preference. In the OpenInterpreter AI content channel, a user described a local AI connecting to WiFi using a sticky note. The LLM Finetuning sections cover the search for AI events for consultancy grants, trouble with presentation links, issues saving custom models with HF Trainer, and Octomind abandoning LangChain because of its limitations. They also include praise for the Modal crew's support, a preference for Slack over Discord, and issues with replicating certain information.

Latent Space, AI Stack Devs, MLOps @Chipro Discussions

This section covers discussions from various channels, including insights on AI opportunities, Groq's Whisper model support, music-to-text models, and the reported success of MoA over GPT-4. There are also reports of spam in several channels and announcements for events such as the RecSys Learners Virtual Meetup. The AI Quality Conference and questions about the usefulness of PyTorch's TensorDict and NestedTensor are highlighted as well.

FAQ

Q: What advances has Character.AI made in serving large batch sizes for LLM inference while reducing costs?

A: Character.AI has adopted memory-efficient techniques such as MQA over GQA, hybrid attention horizons, and cross-layer KV-sharing. It has also introduced a stateful caching system to manage long dialogues in Character.AI chats.

Q: What are some AI model releases and benchmark victories highlighted in the newsletter?

A: The newsletter mentions Hermes 2 Theta 70B from Nous Research and Turbcat 8b, both claiming benchmark wins over models such as Llama-3 Instruct. It also covers Anthropic's release of Claude 3.5 Sonnet, with improved performance and new features, and the release of DeepSeek Coder v2, reported to perform comparably to GPT-4 Turbo.

Q: What ethical concerns are raised in the AI industry practices section of the newsletter?

A: The section raises three concerns: OpenAI's collaboration with governments, which prompts questions about AI regulation and AGI safety strategies; criticism of Perplexity AI's practices, which triggers dialogue on ethics in AI development and deployment; and OpenAI's public relations missteps, which lead to speculation about their impact on the company's image and strategies.

Q: What tools and infrastructure challenges are discussed in the newsletter?

A: The newsletter mentions Octomind's move away from LangChain, alternatives such as LangGraph, hardware limitations for running advanced LLMs, GPU choices, Groq's Whisper model support and its claimed high speeds, and the difficulty developers face in choosing hardware for inference.

Q: What are some examples of recent AI releases mentioned in the newsletter?

A: Recent releases mentioned in the newsletter include Claude 3.5 Sonnet by Anthropic, DeepSeek Coder v2, StoryDiffusion (weights not yet released), and OpenDevin. The newsletter also notes a call for collaborators on an open-source machine learning paper predicting IPO success.
