[AINews] Mozilla's AI Second Act

Chapters

AI Twitter Recap

AI Discord Recap

AI Discord Conversations

Discord Community Updates

AI Discord Communities Updates

Exploring Custom Byte Encoding in LLMs

Ethics and Solutions in AI Training

CUDA MODE Highlights

Latent Space ▷ #llm-paper-club-west

Behavior and Bugs in Handling Variables

LangChain AI General

Mozilla AI Meetings Highlights

AI-Powered Academic Tools and GPA Saver Website

AI Twitter Recap

The AI Twitter Recap section covers AI topics discussed on Twitter: new features in the Claude UI, hardware and performance benchmarks for specialized inference chips, theoretical GPU inference limits, open-source models outperforming established ones, and a breakthrough in biological AI, with ESM3 simulating evolution to generate proteins.

AI Discord Recap

This section gives an overview of recent developments and discussions within the AI community on Discord, from LLM performance and benchmarking to optimizing LLM inference and training. It highlights new models such as Granite-8B-Code-Instruct and RefuelLLM-2, aimed at code instruction and data tasks respectively. It also covers multimodal AI for creative outputs, the Stable Artisan Discord bot for media generation, and the IC-Light project for image relighting. On the hardware side, discussions include AMD's Radeon Instinct MI300X challenging Nvidia's GPU dominance, Etched's Sohu AI chip performance claims, and the ongoing debate between specialized AI chips and general-purpose GPUs.

AI Discord Conversations

This section highlights discussions from different AI-related Discord servers, covering model performance, hardware advancements, open-source frameworks, generative models, and ethical considerations in AI. Notable points include AI chips like Etched's Sohu and discussions on NSFW content, model poisoning, and GPU performance. There are also talks on specialized AI hardware trends, optimization techniques, PyTorch integration for GPUs, and community debates on AI ethics and tools.

Discord Community Updates

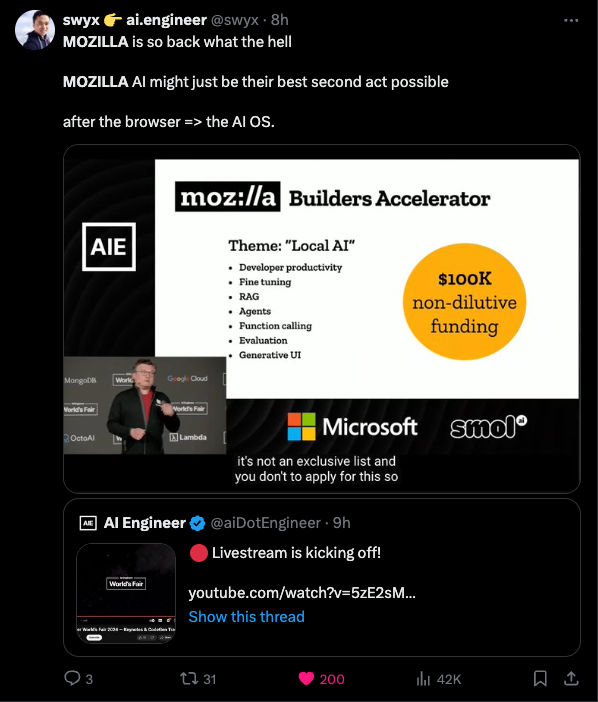

This section collects updates from various Discord communities. Topics range from scam warnings and hardware conversations to innovative ideas, SDK expos, and AI chip performance debates. There is also talk of upcoming events such as the AI Engineer World's Fair, challenges like moonDream's vision model compatibility, and technical issues like gradient accumulation and narrative engine optimization. The Mozilla AI Discord highlights programs like the Builders Program and a '90s nostalgia experience with Firefox. Lastly, the Tinygrad Discord discusses FPGA acceleration and the launch of Positron for high-efficiency AI hardware.

AI Discord Communities Updates

- Moderator Update - AI Stack Devs Discord: User angry.penguin was promoted to moderator to handle spam issues within the guild, showcasing proactive measures and successfully implementing anti-spam protocols. This has led to a cleaner and more focused discussion environment within the community.

- New AI Player and Academic Collaboration - OpenRouter Discord: OpenRouter introduced the language model 01-ai/yi-large specialized in various tasks and announced the GPA Saver platform that leverages AI for academic assistance. Efforts were appreciated for streamlining AI model integration, particularly beneficial for GPA Saver's development.

- German Encoders and Discussion - DiscoResearch Discord: Newly released German encoders aimed at knowledge-based applications and high performance were highlighted, along with discussion of the missing GGUF format for the German V3b encoder. Members speculated on converting the encoders to GGUF for improved performance.

Exploring Custom Byte Encoding in LLMs

In this section, members delve into the use of custom byte encoding for Large Language Models (LLMs) to predict sequences in UTF-32. The discussion covers potential issues with floating point accuracy and robustness, with one member expressing skepticism about its effectiveness but remaining curious about the results.
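The discussion does not spell out the encoding itself, so the following is only a minimal sketch of the general idea. It assumes each Unicode code point is written as four big-endian bytes (UTF-32 without a byte-order mark) and that every byte becomes one token ID in the range 0 to 255. The helper names are hypothetical.

```python
def text_to_byte_tokens(text: str) -> list[int]:
    """Encode text as UTF-32 (big-endian, no BOM) and return one token per byte."""
    return list(text.encode("utf-32-be"))


def byte_tokens_to_text(tokens: list[int]) -> str:
    """Inverse mapping; raises if the tokens do not form valid UTF-32."""
    if len(tokens) % 4 != 0:
        raise ValueError("UTF-32 needs exactly four bytes per code point")
    return bytes(tokens).decode("utf-32-be")


tokens = text_to_byte_tokens("hé")
print(tokens)                       # four byte tokens per character
print(byte_tokens_to_text(tokens))  # round-trips back to the input text
```

One reason for the robustness concern is visible here: a model must get all four bytes of a code point right, and a single wrong byte can produce a different character or a sequence that fails to decode.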

Ethics and Solutions in AI Training

This section covers research on model poisoning, the AIW+ problem, warnings against manual evaluation, disagreement over proposed solutions, Eleuther's announcements at ICML 2024, and research on memorization in LMs. General discussion touched on finding multimodal models, ICML social threads, Goldfinch model details, documenting how LLMs handle OOD inputs, and vision model recommendations. The debates addressed model training ethics, the complexity of the AIW+ problem, proper evaluation methods, and the implications of different research papers. The community also explored the importance of data curation in multimodal learning, the speculative use of homomorphic encryption in LLMs, and the challenges of grokking and generalization in transformers.

CUDA MODE Highlights

- PyTorch Documentary Premieres: Members shared a YouTube link to the 'PyTorch Documentary Virtual Premiere: Live Stream' featuring key players from the project's early days to the present. An emoji reaction with a goat symbolizing excitement was noted.

- Adam-mini optimizer reduces memory usage: Adam-mini is proposed as an optimizer offering equivalent or better performance than AdamW while using 45% to 50% less memory. The GitHub repository contains code and implementation details.

- Raw Kernel for Linear Algebra in PyTorch: A user shared a link to the raw kernel in the PyTorch repository located in the native linear algebra section of the code. Discussions also included issues with tensor subclasses in PyTorch and open source contribution values.
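As a back-of-the-envelope illustration of where a figure like the 45% to 50% quoted for Adam-mini could come from, the sketch below compares optimizer state sizes. It assumes fp32 state, that AdamW keeps two values (first and second moment) per parameter, and that Adam-mini keeps the first moment per parameter but only one second-moment value per parameter block. The function names, the 7B parameter count, and the block count are illustrative and not taken from the Adam-mini repository.

```python
BYTES_PER_FP32 = 4


def adamw_state_bytes(n_params: int) -> int:
    """AdamW: first and second moment stored for every parameter."""
    return 2 * n_params * BYTES_PER_FP32


def adam_mini_state_bytes(n_params: int, n_blocks: int) -> int:
    """Assumed Adam-mini layout: per-parameter first moment, per-block second moment."""
    return (n_params + n_blocks) * BYTES_PER_FP32


n_params = 7_000_000_000  # hypothetical 7B-parameter model
n_blocks = 10_000         # hypothetical number of parameter blocks

full = adamw_state_bytes(n_params)
mini = adam_mini_state_bytes(n_params, n_blocks)
saving = 1 - mini / full
print(f"AdamW: {full / 1e9:.1f} GB, Adam-mini: {mini / 1e9:.1f} GB, saving: {saving:.1%}")
```

Under these assumptions the saving approaches 50% of optimizer state whenever the number of blocks is small compared with the number of parameters.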

Latent Space ▷ #llm-paper-club-west

Vectors in SQLite Shine:

Multiple users expressed excitement about the topic, with one noting “Vectors in SQLite 🔥” and another capturing a screenshot of a relevant slide.
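The summary does not say which SQLite extension or schema the talk used. As a rough sketch of the general idea only, the example below uses nothing but Python's standard library: embeddings are stored as float32 BLOBs and ranked by brute-force cosine similarity in Python. The table name, helper names, and toy vectors are hypothetical.

```python
import math
import sqlite3
import struct


def pack(vec):
    """Serialize a list of floats as little-endian float32 bytes."""
    return struct.pack(f"<{len(vec)}f", *vec)


def unpack(blob):
    """Deserialize float32 bytes back into a list of floats."""
    return list(struct.unpack(f"<{len(blob) // 4}f", blob))


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE docs (id INTEGER PRIMARY KEY, text TEXT, embedding BLOB)")
rows = [
    ("cats purr", [1.0, 0.0, 0.0]),
    ("dogs bark", [0.0, 1.0, 0.0]),
    ("kittens meow", [0.9, 0.1, 0.0]),
]
conn.executemany(
    "INSERT INTO docs (text, embedding) VALUES (?, ?)",
    [(text, pack(vec)) for text, vec in rows],
)


def search(conn, query_vec, k=2):
    """Return the k texts whose stored embeddings are most similar to query_vec."""
    scored = [
        (cosine(query_vec, unpack(blob)), text)
        for text, blob in conn.execute("SELECT text, embedding FROM docs")
    ]
    scored.sort(reverse=True)
    return [text for _, text in scored[:k]]


print(search(conn, [1.0, 0.0, 0.0]))
```

A full table scan like this only suits small collections; dedicated SQLite vector extensions exist to move the distance computation and indexing into the database itself.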

Vector Databases Declared Dead:

A bold statement was made that 'vectordbs are dead,' which sparked reactions among the participants.

Slides Will Not Be Available:

When asked if the slides from the presentation would be available later, the response was a firm 'no,' disappointing some attendees.

AI Engineer Conference Hiccups:

The conference faced several issues, including a 10-minute late start leading to a canceled talk and OpenAI dropping out with less than 48 hours notice. Swyxio expressed frustration about audio issues, saying, 'this audio issue is pissing me off.'

YouTube Livestream for Follow-ups:

Swyxio referred attendees looking to catch up on missed content to the YouTube livestream for the 'AI Engineer World’s Fair 2024 — Keynotes & CodeGen Track.'

Behavior and Bugs in Handling Variables

Bugs related to compile-time evaluation and using static lifetime for references were discussed in this section. Regarding compile-time bugs, there was a report on issues with 'List' not working correctly at compile time in Mojo, and a problem with 'Tensor' leading to inconsistent results. To address lifetime issues with 'alias' items, the concept of 'ImmutableStaticLifetime' was introduced, similar to using 'let' for better management of static items.

LangChain AI General

Stream LangChain responses with .stream() method:

After installing Ollama and importing a chat model from langchain_community.chat_models, the recommendation is to call .stream("query") on the model. The method returns an iterator, so tokens can be consumed as they arrive and printed line by line.
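The pattern can be sketched as follows. To keep the example runnable without an Ollama server, it uses a hypothetical stand-in model (`FakeChatModel`) whose `.stream()` yields chunks with a `.content` attribute, which is the shape LangChain chat models stream. The helper name `print_stream` is also an assumption for illustration.

```python
from dataclasses import dataclass
from typing import Iterator


@dataclass
class FakeChunk:
    """Hypothetical stand-in for a streamed message chunk exposing .content."""
    content: str


class FakeChatModel:
    """Hypothetical stand-in so the sketch runs without an Ollama server."""

    def stream(self, query: str) -> Iterator[FakeChunk]:
        for piece in ("Streaming ", "works ", "token ", "by ", "token"):
            yield FakeChunk(content=piece)


def print_stream(model, query: str) -> str:
    """Print each chunk on its own line as it arrives and return the full text."""
    pieces = []
    for chunk in model.stream(query):
        print(chunk.content, flush=True)
        pieces.append(chunk.content)
    return "".join(pieces)


full_text = print_stream(FakeChatModel(), "query")
```

With the real library, the same helper would presumably be called with something like `ChatOllama(model="llama3")` imported from `langchain_community.chat_models`; the model name here is an assumption and depends on what has been pulled locally.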

Long-term memory with Zep looks promising:

Users are discussing the potential of using Zep for an AI's long-term memory, which can populate prompts with relevant facts from past conversations.

Using BytesIO for PDF in LangChain:

A user seeks a method to load a PDF document directly from a BytesIO object without creating a temporary file. The current workaround involves creating a temp file, which is seen as inefficient.

Streamlit with AgentExecutor and streaming responses:

Instructions were provided for using StreamlitCallbackHandler to visualize an agent's thoughts and actions live in a Streamlit app. Users are also looking for ways to handle streaming responses within this setup without using callback handlers.

LangSmith tracing issue troubleshooting:

A user inquires about LangSmith no longer tracing their project despite having set all required environment variables. The suggestion is to check if the trace quota has been exhausted.
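Before looking at quota, it can help to rule out the environment itself. The sketch below is a small, hypothetical checker, assuming the variable names commonly documented for LangSmith at the time (`LANGCHAIN_TRACING_V2` and `LANGCHAIN_API_KEY`); verify them against the current LangSmith documentation, since the thread does not list them.

```python
import os


def tracing_env_problems(env=None) -> list[str]:
    """Return human-readable problems with the assumed LangSmith tracing variables."""
    env = os.environ if env is None else env
    problems = []

    tracing = env.get("LANGCHAIN_TRACING_V2", "")
    if not tracing:
        problems.append("LANGCHAIN_TRACING_V2 is not set")
    elif tracing.lower() != "true":
        problems.append("LANGCHAIN_TRACING_V2 is set but not 'true'")

    if not env.get("LANGCHAIN_API_KEY"):
        problems.append("LANGCHAIN_API_KEY is not set")

    return problems


for problem in tracing_env_problems():
    print(problem)
```

An empty result only means the variables look plausible. An exhausted trace quota, as suggested in the thread, would still have to be checked in the LangSmith account itself.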

Mozilla AI Meetings Highlights

Members of the Mozilla AI community participated in various discussions and activities during the recent meetings. Some highlights include:

- Experimenting with Websim.ai for webpage simulation

- Raising concerns about commercial abuse of Command-R by SpicyChat AI

- Announcing the release of Rig Rust Library for LLM-powered applications

- Discussing the optimization of memory usage with Adam-mini

- Seeking solutions for issues with CUDA errors during training

- Introducing Storiagl platform for story creation using LLMs

- Sharing updates on the Builders Program and early deadline reminder

- Exploring llamafile integration in Firefox for a nostalgic web experience

- Conversations around FPGA backend, transformer inference, and PyTorch documentary

- Releasing new German encoders and discussing finetuning methods

- Introducing the Yi Large model by OpenRouter LLC

- Appointing mods and discussing spam prevention strategies in the AI community

AI-Powered Academic Tools and GPA Saver Website

The section discusses the launch of the GPA Saver website that integrates AI for academic assistance. The website offers various academic tools such as an assistant chat, rapid quiz solver, PDF summarizer, interactive whiteboard, and flashcard generator. A special discount code 'BETA' is available for the first 100 users, providing approximately 37% off. The mention of GPA Saver encourages leveraging AI for academic studies.

FAQ

Q: What topics are covered in the AI Twitter Recap section?

A: The AI Twitter Recap section covers updates on UI features, hardware benchmarks, GPU limits, open-source models, and breakthroughs in biological AI.

Q: What are some of the discussions highlighted in the AI community on Discord?

A: Discussions highlighted include LLM performance, new AI models like Granite-8B-Code-Instruct and RefuelLLM-2, multimodal AI, AI hardware race, and ethical considerations in AI.

Q: What were the notable updates and discussions in various AI-related Discord communities?

A: Updates included advancements in AI chips like Etched's Sohu, discussions on NSFW content, model poisoning, GPU performance, AI hardware trends, PyTorch integration, and debates on AI ethics and tools.

Q: What are some of the recent developments in AI hardware mentioned in the article?

A: The developments include debates on AMD's Radeon Instinct MI300X challenging Nvidia's GPU dominance, Etched's Sohu AI chip performance claims, and the ongoing debate between specialized AI chips and general-purpose GPUs for AI hardware acceleration.

Q: What significant collaborations and updates were highlighted in the article?

A: Collaborations like OpenRouter introducing language model 01-ai/yi-large and academic collaboration with GPA Saver, advancements in German encoders, and updates on initiatives like Mozilla AI Builders Program, PyTorch documentary, and FPGA acceleration were highlighted.

Q: What bug-related discussions in the article concerned compile-time and static lifetime issues?

A: Discussions revolved around issues with 'List' not working correctly at compile time in Mojo, inconsistent results due to problems with 'Tensor,' and addressing lifetime issues with 'alias' items using 'ImmutableStaticLifetime' for better management.
