[AINews] HippoRAG: First, do know(ledge) Graph • Buttondown

Chapters

Email Body Content

AI Twitter, Reddit, and Discord Recap

Community Tools, Tips, and Collaborative Projects

Nous Research AI Discord

Issues and Updates

FastHTML Features and Components in LLM Finetuning

LLM Finetuning Discussions

Perplexity AI - Perplexity releases official trailer for 'The Know-It-Alls'

HuggingFace Discussions Overview

Unsloth AI (Daniel Han) Community Collaboration

LM Studio Hardware Discussion

Nous Research AI - LlamaIndex General

Entity Resolution in Graphs

Usage and Developments in Data and Machine Learning Research

OpenAccess AI Collective (axolotl) General Section

AI Related Discussions

Email Body Content

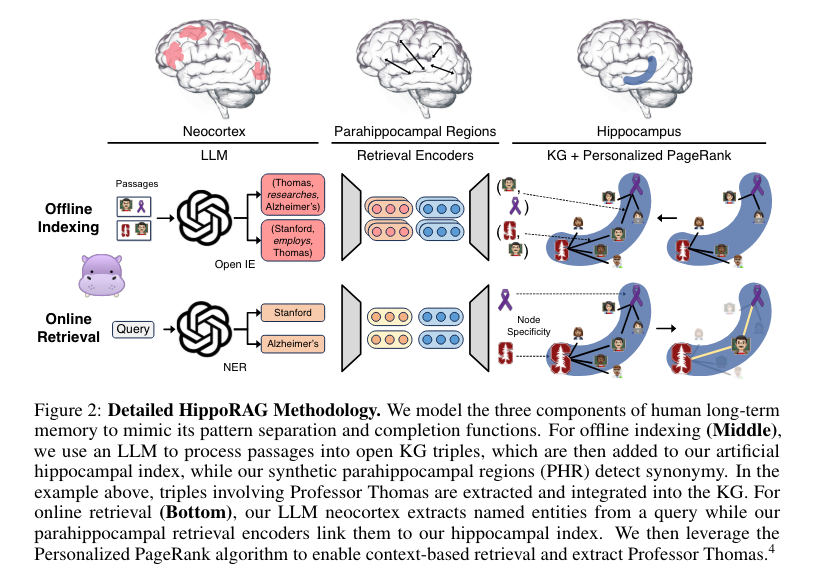

This issue of the AI News newsletter, published on June 7, 2024, recaps AI news from Twitter, Reddit, and Discord. Its lead story is the HippoRAG paper, which draws on 'hippocampal memory indexing theory' and implements it with a knowledge graph and 'Personalized PageRank'. The paper introduces the idea of 'Single-Step, Multi-Hop retrieval' and includes a concise literature review of approaches to emulating memory in Large Language Models (LLMs).
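To give a feel for the Personalized PageRank step, here is a minimal sketch, assuming a tiny hypothetical knowledge graph; it is not HippoRAG's code. Random walks restart at "seed" entities extracted from a query, so entities linked to several seeds accumulate the most probability mass. Scoring the whole graph at once like this is what lets multi-hop associations surface in a single retrieval step.

```python
def personalized_pagerank(graph, seeds, alpha=0.85, iters=100, tol=1e-10):
    """Power iteration for Personalized PageRank.

    graph: dict mapping node -> list of neighbour nodes (every neighbour must be a key).
    seeds: nodes where the random walk restarts (uniform restart distribution).
    alpha: probability of following an edge instead of restarting.
    """
    nodes = list(graph)
    restart = {n: (1.0 / len(seeds) if n in seeds else 0.0) for n in nodes}
    rank = dict(restart)
    for _ in range(iters):
        # Restart mass goes only to the seed nodes.
        new = {n: (1.0 - alpha) * restart[n] for n in nodes}
        for n in nodes:
            out = graph[n]
            if out:
                share = alpha * rank[n] / len(out)
                for m in out:
                    new[m] += share
            else:
                # Dangling node: send its mass back to the seeds so the total stays 1.
                for s in seeds:
                    new[s] += alpha * rank[n] / len(seeds)
        delta = sum(abs(new[n] - rank[n]) for n in nodes)
        rank = new
        if delta < tol:
            break
    return rank

# Hypothetical entity graph (edges stored in both directions).
graph = {
    "Stanford": ["Prof. X"],
    "Alzheimer's": ["Prof. X"],
    "Prof. X": ["Stanford", "Alzheimer's", "Neuroscience"],
    "Neuroscience": ["Prof. X"],
    "Paris": ["France"],
    "France": ["Paris"],
}
seeds = ["Stanford", "Alzheimer's"]
scores = personalized_pagerank(graph, seeds)
# Highest-scoring entity that was not itself a query seed.
best = max((n for n in scores if n not in seeds), key=scores.get)
```

Here "Prof. X", the entity connected to both query concepts, ranks highest among non-seed nodes, while the disconnected "Paris"/"France" component receives no mass.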

AI Twitter, Reddit, and Discord Recap

This section summarizes recent AI developments and discussions from several social platforms: new model releases and architectures, advances in multimodal AI and robotics, updates to AI tools, frameworks, and platforms, model benchmarks and evaluations, and broader perspectives on AI. It also covers Chinese AI models, debates on AI capabilities and limitations, research developments, AI ethics and regulation, and practical issues in implementing AI models.

Community Tools, Tips, and Collaborative Projects

AI Regulation, Safety, and Ethical Discussions:

- Andrew Ng's Concerns on AI Regulation echo global debates over whether regulation stifles AI innovation; comparisons with Russian AI policy discussions reveal differing stances on open-source and ethical AI (YouTube).

- Leopold Aschenbrenner's Departure from OpenAI sparks heated debate about the importance of AI security measures, reflecting divided opinions on how AI should be safeguarded (OpenRouter discussions).

- AI Safety in Art Software: Adobe's requirement for access to all user work, including projects under NDA, prompts suggestions of alternatives such as Krita or GIMP for privacy-conscious users (Twitter thread).

Community Tools, Tips, and Collaborative Projects:

- Predibase Tools and Enthusiastic Feedback: Members praise LoRAX for cost-effective LLM deployment, despite some hiccups with email registration (Predibase tools).

- WebSim.AI for Recursive Analysis: AI engineers share their experiences using WebSim.AI for recursive simulations and brainstorm useful metrics that could be derived from hallucinations (Google spreadsheet).

- Modular's MAX 24.4 Update introduces a new Quantization API and macOS compatibility, improving Generative AI pipelines with significant reductions in latency and memory use (Blog post).

- GPU Cooling and Power Solutions: Members discuss methods for cooling and powering a Tesla P40 and similar hardware, with practical setup guides (GitHub guide).

- Experimentation and Learning Resources provided by tcapelle include practical notebooks and GitHub resources for fine-tuning and efficiency (Colab notebook).
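MAX's Quantization API itself is not shown here. As a generic illustration of what weight quantization does, this sketch applies symmetric per-tensor int8 quantization in plain Python. Weights are scaled into [-127, 127], rounded, and later dequantized, trading a small reconstruction error (at most half a quantization step) for storage that is 4x smaller than float32. The function names are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization; returns (integer codes, scale)."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest step and clamp to the symmetric int8 range.
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale

def dequantize_int8(codes, scale):
    """Map integer codes back to approximate float weights."""
    return [c * scale for c in codes]

weights = [0.12, -0.5, 0.33, 0.0, 0.49]
codes, scale = quantize_int8(weights)
restored = dequantize_int8(codes, scale)
max_err = max(abs(a - b) for a, b in zip(weights, restored))
```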

Nous Research AI Discord

Mixtral's Expert Count Revealed

A Stanford CS25 talk clears up a common misconception about Mixtral 8x7B: its experts are defined per layer, so the model contains 32x8 experts (8 in each of its 32 layers), which highlights the complexity of its MoE architecture.
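A minimal sketch of where the "8" comes from, assuming Mixtral-style top-2 routing: in every layer a router scores that layer's 8 experts for each token, keeps the best 2, and mixes their outputs with softmax-renormalized weights. The router logits and the toy experts below are made up for illustration; real experts are feed-forward networks.

```python
import math

def top2_route(router_logits):
    """Pick the 2 highest-scoring experts and softmax-renormalize their weights."""
    top = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)[:2]
    exps = [math.exp(router_logits[i]) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]

def moe_layer(x, router_logits, experts):
    """Weighted sum of the selected experts' outputs for one token (x is a list of floats)."""
    out = [0.0] * len(x)
    for idx, weight in top2_route(router_logits):
        y = experts[idx](x)
        out = [o + weight * v for o, v in zip(out, y)]
    return out

# Toy experts: expert k just scales its input by (k + 1).
experts = [lambda x, k=k: [(k + 1) * v for v in x] for k in range(8)]
logits = [0.1, 2.0, -1.0, 0.5, 1.0, -0.3, 0.0, 0.2]
routes = top2_route(logits)            # experts 1 and 4 win for this token
y = moe_layer([1.0, 2.0], logits, experts)

# Each of the 32 layers has its own 8 experts.
LAYERS, EXPERTS_PER_LAYER = 32, 8
total_expert_blocks = LAYERS * EXPERTS_PER_LAYER  # 256
```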

DeepSeek Coder Triumphs in Code Tasks

DeepSeek Coder 6.7B takes the lead in project-level code completion, showing strong performance after training on 2 trillion tokens, most of them code.

Meta AI Spells Out Vision-Language Modeling

Meta AI offers a comprehensive guide on Vision-Language Models (VLMs), detailing their workings, training, and evaluation for those interested in the fusion of vision and language.

RAG Formatting Finesse

The conversation around RAG dataset creation underscores the need for simplicity and specificity, rejecting cookie-cutter frameworks and emphasizing tools like Prophetissa for dataset generation.
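To make the "simple and specific" advice concrete, here is one hypothetical record layout for a RAG fine-tuning dataset, written as JSON Lines with the standard library. The field names (question, context, answer, source) and the source path are assumptions for illustration; Prophetissa's actual output format is not described here.

```python
import json

def make_record(question, context_chunks, answer, source):
    """Build one flat, explicit training record with no framework-specific wrapping."""
    return {
        "question": question.strip(),
        "context": [c.strip() for c in context_chunks],
        "answer": answer.strip(),
        "source": source,
    }

records = [
    make_record(
        "Which theory does HippoRAG draw on?",
        ["HippoRAG draws on hippocampal memory indexing theory."],
        "Hippocampal memory indexing theory.",
        "newsletter/2024-06-07",  # hypothetical source identifier
    ),
]

# One JSON object per line (JSONL): easy to diff, stream, and split into train/test sets.
jsonl = "\n".join(json.dumps(r, ensure_ascii=False) for r in records)
```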

WorldSim Console's Mobile Mastery

The latest WorldSim console update fixes mobile user interface issues: it resolves text-input bugs, improves the !list commands, adds settings for disabling visual effects, and integrates Claude models.

Issues and Updates

This section covers issues and updates from the PyTorch Torchtune, AI Stack Devs, OpenInterpreter, DiscoResearch, Datasette - LLM, and tinygrad Discord channels. Topics include clarifications on PyTorch nightly builds, a PR for Torchtune installation, discussions of gaming studios, an AI-powered 'Among Us' mod, AI advances in text analysis, memory efficiency in model selection, and questions about various AI models and tools.

FastHTML Features and Components in LLM Finetuning

Members of the LLM Finetuning discussion group talked through FastHTML's features and components with evident excitement and curiosity. They compared FastHTML favorably with FastAPI and Django and went into detail on building apps, connecting to multiple databases, and using libraries such as picolink and daisyUI.

Jeremy and John shared their contributions and future plans: Markdown support, community-built component libraries, and easy-to-use FastHTML libraries for popular frameworks such as Bootstrap and Material Tailwind. The conversation also covered rendering Markdown in FastHTML with scripts and the NotStr class, and using HTMX in FastHTML for tasks such as handling keyboard shortcuts and database interactions.

Members also discussed coding tools and environments, including Cursor and Railway, and shared FastHTML resources such as tutorials and GitHub repositories.
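The NotStr discussion comes down to HTML escaping: as far as we understand, FastHTML escapes ordinary strings when rendering, and wrapping already-rendered HTML (for example, Markdown converted to HTML) in NotStr tells it to emit that text unchanged. The sketch below reproduces the idea with only the standard library; `RawHTML` and `render_p` are hypothetical stand-ins, not FastHTML's API.

```python
import html

class RawHTML(str):
    """Marks a string as trusted, pre-rendered HTML (similar in spirit to FastHTML's NotStr)."""

def render_p(child):
    """Render a <p> element, escaping plain strings but passing RawHTML through unchanged."""
    body = child if isinstance(child, RawHTML) else html.escape(child)
    return f"<p>{body}</p>"

escaped = render_p("<b>bold</b>")       # tags are shown as text
raw = render_p(RawHTML("<b>bold</b>"))  # tags are rendered as markup
```

Only wrap content you trust this way: anything marked as raw bypasses escaping, so user-supplied text should go through the escaping path.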

LLM Finetuning Discussions

- A user looks for a Python script to run full fine-tuning (FFT) on Modal.

- Chat templates for Mistral Instruct are discussed.

- Members discuss why combining Axolotl and HF (Hugging Face) templates is inadvisable.

- A user asks about token space assembly in Axolotl.

- Members discuss an issue where merging a LoRA into a 7B model produced extra shards.

- Members in several channels report problems with credits.

- OpenAI's new option to disable parallel function calling is highlighted.

- OpenAI credits expiry, access issues to GPT-4, and third-party tool integration are detailed.

- tcapelle shares a talk and resources in the Capelle Experimentation channel, including fine-tuning tips and insights on learning and pruning.

- Weave toolkit integration and community ideas are discussed, with members noting the group's engaging and supportive atmosphere.
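Since Mistral Instruct chat templates came up, a rough sketch of the prompt layout may help: each user turn is wrapped in [INST] ... [/INST], and each completed assistant reply is followed by the end-of-sequence token. Exact whitespace and special-token handling differ between Mistral tokenizer versions, so treat this plain-string builder (the function name is hypothetical) as an approximation and prefer the tokenizer's own chat template in practice.

```python
def build_mistral_prompt(turns, bos="<s>", eos="</s>"):
    """turns: list of (user, assistant) pairs; assistant is None for the turn to be answered."""
    parts = [bos]  # BOS appears once, at the very start
    for user, assistant in turns:
        parts.append(f"[INST] {user.strip()} [/INST]")
        if assistant is not None:
            parts.append(f" {assistant.strip()}{eos}")
    return "".join(parts)

prompt = build_mistral_prompt([
    ("What is LoRA?", "A low-rank adaptation method."),
    ("Can it be merged into the base model?", None),
])
```

Layering a second formatter (for example, a framework's own prompt template) on top of a string like this can duplicate markers such as `<s>`, which is one reason mixing template systems is discouraged.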

Perplexity AI - Perplexity releases official trailer for 'The Know-It-Alls'

- Perplexity AI shared a link to the YouTube video titled 'The Know-It-Alls' in their announcements.

- The video poses the intriguing question, 'If all the world’s knowledge were at our fingertips, could we push the boundaries of what’s possible?'

- The Perplexity AI Discord community reacted to the video, with members discussing and sharing their thoughts.

HuggingFace Discussions Overview

- Virtual Environments and Package Managers: Members discuss the use of conda or pyenv for managing Python environments, highlighting challenges and preferences.

- Python and PyTorch Version Compatibility: Discussion of PyTorch not supporting Python 3.12 and the struggle to keep versions compatible across projects.

- HuggingFace and Academic Research Queries: Feasibility of using HuggingFace AutoTrain for academic projects and concerns about service limitations and API costs.

- AI for Click-through Rate and Fashionability: Utilizing AI to predict clickable YouTube thumbnails and fashionable clothing, considering reinforcement learning methods.

- Gradio Privacy Concerns: Issues regarding privacy settings in Gradio apps and updating versions across repositories.

- Collaboration Request and TorchTune Introduction: A senior developer seeks collaboration on an AI project, and a member shares TorchTune, a PyTorch library for LLM fine-tuning.

- Discussion on Fluently XL Usage and Reading Group: Experience shared on using Fluently XL for text-to-image, plans for a coding tutorial reading group, and a demo of a multi-agent system.

- Introduction to Runtime Detection Crate and Droid with Dolphin-Llama3 Model: Announcements about a new Rust crate for runtime environment detection and a comical scenario of a droid running the Dolphin-Llama3 model.

- GitHub Repository for HuggingFace Reading Group and Various Meetings: Compilation of past HuggingFace reading group presentations, papers on neural network inductive bias, and multi-node LLM fine-tuning.

- Diffusion Discussions on Text-to-Image Generation and Model Training: Exploration of Diffusers for text-to-image scripts, optimized inference approaches, and training models from scratch.

Unsloth AI (Daniel Han) Community Collaboration

- Friendly Invitation: "You should better invite em here!" and the reassurance "We are more friendlier" show the community's welcoming nature.

- Community Praise: A new member said, "ahhaa i just joined the discord server it's very nice", and another added "thank you sharing!", reflecting gratitude and positive engagement within the group.

- Member Recognition: One member singled out key contributors by saying "no one beats @1179680593613684819 or @160322114274983936", acknowledging their valued contributions to the community.

LM Studio Hardware Discussion

This section covers hardware issues and modifications. Users compare benchmark scores and discuss GPU cooling challenges, including the difficulty of fitting a cooling solution to a Tesla P40. Others describe MacGyver-style cooling mods built from cardboard and raise power-supply concerns when connecting a Tesla P40. The thread also includes a humorous exchange about the unconventional but necessary modifications users make to their setups.

Nous Research AI - LlamaIndex General

When the query engine does not immediately reflect changes made to a VectorStoreIndex, one workaround is to reload the index periodically so that the RAG app answers queries using the latest data. For index management, members recommended either separate indexes or metadata filters. Users also discussed how to build property graphs with embeddings in LlamaIndex, and the benefit of attaching text embeddings of entities and their synonyms directly to the entity nodes in the knowledge graph.
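The metadata-filter recommendation can be illustrated without LlamaIndex itself. This toy in-memory store (class and method names are hypothetical, not LlamaIndex's API) keeps (embedding, metadata, text) entries, applies an exact-match metadata filter first, and then ranks the remaining entries by cosine similarity. Because `add` writes to the same list that `query` reads, new documents are visible immediately, which is the behaviour the reload workaround aims for.

```python
import math

class ToyVectorStore:
    """Minimal in-memory vector store with exact-match metadata filters."""

    def __init__(self):
        self.entries = []  # list of (embedding, metadata, text)

    def add(self, embedding, metadata, text):
        self.entries.append((embedding, metadata, text))

    @staticmethod
    def _cosine(a, b):
        dot = sum(x * y for x, y in zip(a, b))
        norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
        return dot / norm if norm else 0.0

    def query(self, embedding, top_k=2, filters=None):
        filters = filters or {}
        # Filter on metadata first, then rank only the surviving entries.
        candidates = [
            e for e in self.entries
            if all(e[1].get(k) == v for k, v in filters.items())
        ]
        ranked = sorted(candidates, key=lambda e: self._cosine(embedding, e[0]), reverse=True)
        return [text for _, _, text in ranked[:top_k]]

store = ToyVectorStore()
store.add([1.0, 0.0], {"source": "faq"}, "FAQ: reset your password")
store.add([0.9, 0.1], {"source": "changelog"}, "Changelog: password rules updated")
store.add([0.0, 1.0], {"source": "faq"}, "FAQ: billing cycle")

hits = store.query([1.0, 0.0], top_k=1, filters={"source": "changelog"})
```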

Entity Resolution in Graphs

Entity resolution can be performed by defining a custom retriever that locates duplicate nodes and combines them, using operations such as manual deletion and upsert; members illustrated this with an example based on the delete method.
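The merge-and-delete approach can be sketched on a plain adjacency dictionary: choose a canonical node, move the duplicates' edges onto it (the "upsert"), re-point edges that referenced a duplicate, then delete the duplicates. This is a hypothetical stand-alone illustration, not LlamaIndex's property-graph API or the delete example from the discussion.

```python
def merge_entities(graph, canonical, duplicates):
    """Merge duplicate entity nodes into `canonical` in a directed graph.

    graph: dict mapping node -> set of neighbour nodes (mutated in place).
    """
    graph.setdefault(canonical, set())
    for dup in duplicates:
        # Upsert: move the duplicate's outgoing edges onto the canonical node, then delete it.
        graph[canonical] |= graph.pop(dup, set())
    dup_set = set(duplicates)
    for neighbours in graph.values():
        # Redirect incoming edges that pointed at any duplicate.
        if neighbours & dup_set:
            neighbours -= dup_set
            neighbours.add(canonical)
    # Drop a self-loop created when duplicates pointed at each other or at the canonical node.
    graph[canonical].discard(canonical)
    return graph

graph = {
    "New York City": {"Hudson River"},
    "NYC": {"Brooklyn"},
    "N.Y.C.": set(),
    "Brooklyn": {"NYC"},
    "Hudson River": {"N.Y.C."},
}
merge_entities(graph, "New York City", ["NYC", "N.Y.C."])
```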

Usage and Developments in Data and Machine Learning Research

Data valuation and selection are central principles in data-centric machine learning (ML) research. Other developments discussed include the Thousand Brains Project, new autoregressive models that challenge fixed generation orders, and methods such as ReST-MCTS for improving the quality of LLM training. Members also debated open-ended AI systems, sparse autoencoders for interpretability, and frameworks for language model evaluation. Together, these discussions show how quickly ML research is evolving.
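Sparse autoencoders used for interpretability typically learn an overcomplete dictionary: a ReLU encoder maps a model activation to more features than it has dimensions, a linear decoder reconstructs the activation, and an L1 penalty keeps most features at zero. The pure-Python sketch below computes only the forward pass and that loss for one vector with hand-picked weights; real training would use a tensor library and gradient descent, and the coefficient name `l1_coeff` is an assumption.

```python
def relu(v):
    return [max(0.0, x) for x in v]

def matvec(m, v):
    """Multiply matrix m (list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def sae_loss(x, w_enc, b_enc, w_dec, l1_coeff=0.01):
    """Reconstruction MSE plus an L1 sparsity penalty on the feature activations."""
    features = relu([z + b for z, b in zip(matvec(w_enc, x), b_enc)])
    x_hat = matvec(w_dec, features)
    mse = sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)
    l1 = sum(abs(f) for f in features)
    return mse + l1_coeff * l1, features

# A 2-dim activation and 4 dictionary features (overcomplete).
w_enc = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]  # 4 x 2
b_enc = [0.0, 0.0, 0.0, 0.0]
w_dec = [[1.0, 0.0, -1.0, 0.0], [0.0, 1.0, 0.0, -1.0]]      # 2 x 4
loss, features = sae_loss([2.0, 0.0], w_enc, b_enc, w_dec)
```

For this input only the first feature fires, the reconstruction is exact, and the loss reduces to the sparsity term.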

OpenAccess AI Collective (axolotl) General Section

The general channel of the OpenAccess AI Collective (axolotl) Discord covers Torchtune availability, Japanese-specialized models, problems with Qwen2 72b finetuning, model performance, and a pull request for a distributed finetuning guide. Debates span Axolotl API usage, Flash-attn RAM requirements, and AI safety in art software. Members also work through JSONL test splits, datasets, distributed finetuning guides, and problems installing Nvidia Apex on Slurm. Additionally, the community shares experiences building flash-attention on Slurm, creating a cluster-specific CUDA load module, and possible guides for installing Megatron on Slurm.

AI Related Discussions

This section highlights AI and technology discussions across several Discord channels. Topics include exporting custom phi-3 models in Torchtune, clarifications on the HF format for model files, interest in n_kv_heads for MQA/GQA, dependency versioning for Torchtune, supporting PyTorch nightlies, and AI integration at the OS level. There are also talks about Mixtral experts, parameter counts, and the potential integration of LLMs into web platforms. Other topics cover exploring concept velocity in text, dimensionality reduction for embeddings, and challenges in understanding proofs. Members also mention upcoming PRs, struggles with tool use in LM Studio, and potential enhancements to AI Town, and the channels close with expressions of thanks and acknowledgments.
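On the n_kv_heads question: in grouped-query attention (GQA), several query heads share one key/value head, and multi-query attention (MQA) is the special case n_kv_heads = 1. The sketch below (helper names are hypothetical) maps each query head to its KV head using the contiguous grouping common in open implementations, and shows how the KV cache shrinks relative to standard multi-head attention.

```python
def kv_head_for(query_head, n_heads, n_kv_heads):
    """Index of the KV head that a given query head uses."""
    if n_heads % n_kv_heads:
        raise ValueError("n_heads must be divisible by n_kv_heads")
    group_size = n_heads // n_kv_heads
    return query_head // group_size

def kv_cache_ratio(n_heads, n_kv_heads):
    """KV-cache size relative to multi-head attention (where n_kv_heads == n_heads)."""
    return n_kv_heads / n_heads

# 32 query heads sharing 8 KV heads (GQA): each KV head serves 4 consecutive query heads.
gqa_map = [kv_head_for(h, 32, 8) for h in range(32)]
```

With 8 KV heads the cache is a quarter of the multi-head size; with a single KV head (MQA), every query head maps to head 0.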

FAQ

Q: What is the main focus of the HippoRAG paper discussed in the AI News newsletter?

A: The HippoRAG paper draws on 'hippocampal memory indexing theory' and implements it with a knowledge graph and 'Personalized PageRank'.

Q: What concept does the HippoRAG paper introduce in relation to memory emulation in Large Language Models (LLMs)?

A: The HippoRAG paper introduces the concept of 'Single-Step, Multi-Hop retrieval' in relation to memory emulation in Large Language Models (LLMs).

Q: What are some of the advancements and discussions covered in the AI News newsletter related to AI tooling and platforms?

A: The AI News newsletter covers advancements in multimodal AI and robotics, updates on AI tooling and platforms, benchmarks and evaluations of AI models, as well as discussions and perspectives on AI.

Q: What are the key aspects discussed in the AI Regulation, Safety, and Ethical Discussions highlighted in the newsletter?

A: The newsletter addresses concerns on AI regulation stifling innovation, debates on open-source and ethical AI, the importance of AI security measures, privacy issues in AI software, and comparisons of AI policies across different regions.

Q: What are some of the community tools and projects discussed in the AI News newsletter section?

A: The newsletter mentions tools like Predibase for LLM deployment, WebSim.AI for recursive analysis, the Modular MAX 24.4 update, and discussions on GPU cooling solutions and learning resources for fine-tuning AI models.

Q: What does the AI News newsletter highlight about the Meta AI Vision-Language Models (VLMs) guide?

A: The newsletter highlights Meta AI's comprehensive guide to Vision-Language Models (VLMs), which details how they work, how they are trained, and how they are evaluated.

Q: What information is shared about the RAG dataset creation in the AI News newsletter?

A: The conversation around RAG dataset creation emphasizes the need for simplicity and specificity, rejecting generic frameworks and suggesting tools like Prophetissa for dataset generation.

Q: What updates are mentioned regarding the WorldSim console in the AI News newsletter?

A: The latest WorldSim console update addresses mobile user interface issues, enhances user experience with bug fixes and new settings, and integrates versatile Claude models for improved functionality.

Q: What hardware-related issues are discussed in the AI News newsletter?

A: The newsletter mentions discussions on benchmark scores, GPU cooling challenges, MacGyver-style cooling mods, and power supply concerns.

Q: What are some of the key topics covered in the discussion around LLM Finetuning in the AI News newsletter?

A: The AI News newsletter covers topics like Python scripts for full fine-tuning (FFT), chat templates for Mistral Instruct, discussions on token space assembly, issues with LoRA merges, OpenAI credits expiry, and more related to LLM Finetuning.