[AINews] Gemini launches context caching... or does it?

Chapters

AI Twitter Recap

AI Discord Recap

Tinygrad (George Hotz) Discord

Torchtune Discord

CUDA Mode Discussions

Comparisons with Other Tools

Modular (Mojo 🔥) Discussions

Interconnects (Nathan Lambert) Posts

Perplexity AI Sharing

LLM Finetuning Discussions

LangChain AI Discussion

AI-Content in OpenInterpreter & AI Stack Devs Discussion

AI Twitter Recap

- DeepSeek-Coder-V2 outperforms other models in coding: @deepseek_ai announced the release of DeepSeek-Coder-V2, a 236B parameter model that beats GPT4-Turbo, Claude3-Opus, Gemini-1.5Pro, and Codestral in coding tasks. It supports 338 programming languages and extends context length from 16K to 128K.

- Technical details of DeepSeek-Coder-V2: @rohanpaul_ai shared that DeepSeek-Coder-V2 was created by taking an intermediate DeepSeek-V2 checkpoint and further pre-training it on an additional 6 trillion tokens, followed by supervised fine-tuning and reinforcement learning using the Group Relative Policy Optimization (GRPO) algorithm.

- DeepSeek-Coder-V2 performance and availability: @_philschmid shared details about the performance improvements of DeepSeek-Coder-V2 compared to the previous version. The model's accuracy increased by 12.5% across multiple benchmark coding tasks, and the model is now available for public use and benchmarking. If you are interested in the technical details and performance metrics, check out the shared tweet.
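
The GRPO step mentioned above scores each sampled completion relative to the other completions drawn for the same prompt, rather than against a learned value function. The following is a minimal sketch of that group-relative normalization only, not DeepSeek's training code: the function name, the `eps` constant, the use of the population standard deviation, and the reward values are all assumptions made for illustration.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Normalize each completion's reward against its own sampling group."""
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population std; an assumption for this sketch
    # eps (hypothetical) avoids division by zero when all rewards are equal.
    return [(r - mu) / (sigma + eps) for r in rewards]


# Hypothetical rewards for four completions sampled from one prompt,
# e.g. 1.0 if the generated code passed its tests and 0.0 otherwise.
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_relative_advantages(rewards)
```

Completions that beat their group's average get a positive advantage and the rest get a negative one, so the policy update needs no separate critic model.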

AI Discord Recap

This section provides a recap of discussions and developments in various AI-related Discord channels. It includes updates on new AI models, technical optimizations, discussions on licensing issues, and community concerns. There are insights on model performance, platform compatibility, and future technology trends discussed among the members. The section also covers topics like service interruptions, API usage challenges, ethical debates, and ongoing research in AI development.

Tinygrad (George Hotz) Discord

A pull request is made to round floating points for code clarity in tinygrad, along with a new policy against low-quality submissions. An upgrade in OpenCL error messages is proposed. Discussions revolve around the impacts of 'realize()' on operation outputs and explore how kernel fusion can be influenced by caching and explicit realizations.
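
To make the point about explicit realizations concrete, here is a toy model of lazy evaluation in plain Python. It is not tinygrad code and does not use tinygrad's API: `LazyTensor`, `map`, and the `kernels_launched` counter are hypothetical names invented for this sketch. It only illustrates the general idea that a chain of pending elementwise ops can run as one pass, while realizing in the middle forces two.

```python
class LazyTensor:
    """Toy lazy tensor: records elementwise ops, runs them on realize()."""

    kernels_launched = 0  # simulated kernel launches, shared by all tensors

    def __init__(self, data, pending=()):
        self.data = list(data)
        self.pending = tuple(pending)  # unary functions not yet applied

    def map(self, fn):
        # Record the op instead of executing it.
        return LazyTensor(self.data, self.pending + (fn,))

    def realize(self):
        if self.pending:
            out = []
            for x in self.data:
                for fn in self.pending:  # whole chain applied in one pass
                    x = fn(x)
                out.append(x)
            self.data, self.pending = out, ()
            LazyTensor.kernels_launched += 1
        return self


def double(x):
    return x * 2


def inc(x):
    return x + 1


# Both ops are pending at realize(), so they run as one simulated kernel.
fused = LazyTensor([1, 2, 3]).map(double).map(inc).realize()
fused_kernels = LazyTensor.kernels_launched

# Realizing between the ops splits the same work into two kernels.
LazyTensor.kernels_launched = 0
split = LazyTensor([1, 2, 3]).map(double).realize().map(inc).realize()
split_kernels = LazyTensor.kernels_launched
```

Both versions produce the same values; only the number of simulated launches differs. That is the trade-off the discussion describes when it says explicit realizations can influence fusion.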

Torchtune Discord

- CUDA vs MPS: Beware the NaN Invasion: Engineers discussed an issue where `nan` outputs appeared on CUDA but not on MPS, linked to differences in kernel execution paths for `softmax` operations in SDPA.

- Cache Clash with Huggingface: Concern arose over system crashes during fine-tuning with Torchtune due to Huggingface's cache overflowing.

- Constructing Bridge from Huggingface to Torchtune: A detailed process for converting Huggingface models to Torchtune format was shared, emphasizing Torchtune Checkpointers for weight conversion and loading.

- The Attention Mask Matrix Conundrum: Discussions revolved around the proper attention mask format for padded token inputs to ensure correct application of the model's focus.

- Documentation to Defeat Disarray: Links to Torchtune documentation, including RLHF with PPO and GitHub pull requests, were provided to assist with implementation details and facilitate knowledge sharing among engineers.
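
One common way `nan` values can arise from a masked `softmax` is a query row whose keys are all masked out, which happens easily with padded inputs. The summary does not say this was the cause in the Torchtune thread, so treat the following as an illustrative sketch in plain Python rather than a diagnosis. `masked_softmax` and the example scores are hypothetical, and real attention kernels may handle such rows differently from one backend to another.

```python
import math

NEG_INF = float("-inf")


def masked_softmax(scores, keep):
    """Softmax over scores; positions where keep is False are set to -inf."""
    masked = [s if k else NEG_INF for s, k in zip(scores, keep)]
    top = max(masked)
    # If every position is masked, top is -inf and (-inf) - (-inf) is nan.
    exps = [math.exp(m - top) for m in masked]
    total = sum(exps)
    return [e / total for e in exps]


scores = [0.5, 1.5, -0.3]

# A real token attending to two real keys; the last key is padding.
real_row = masked_softmax(scores, [True, True, False])

# A padding token whose mask hides every key.
pad_row = masked_softmax(scores, [False, False, False])
```

Here `real_row` is a valid distribution with zero weight on the padded key, while every entry of `pad_row` is `nan`. A mask format that leaves each row at least one visible key avoids the second case.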

CUDA Mode Discussions

Discussions in the CUDA mode channel featured debates on various topics related to AI technologies and hardware. Members discussed speculations on Nvidia 5090's RAM, stagnation of AI capabilities, and RDNA4 and Intel Battlemage competition. Additionally, there were conversations about search algorithms, model architecture advancements, and the use of Cloud TPUs with JAX. The discussions also delved into PR reviews, quantization API syntax, and the implementation of different model architectures. Links to related resources and GitHub discussions were shared for further exploration.

Comparisons with Other Tools

Discussions in this section often referenced alternate tools and software. One user mentioned swapping to Pixart Sigma, noting good prompt adherence but issues with limbs. Other users recommended different models and interfaces for various use cases, including StableSwarmUI and ComfyUI.

Modular (Mojo 🔥) Discussions

Standard library enhancements, including improvements in collections, new traits, and os module features, saw 214 pull requests from 18 community contributors, resulting in 30 new features, making up 11% of all enhancements. Discussions around the release covered exploring JIT compilation and WASM, evaluating the MAX Graph API, Mojo traits and concept-like features, the future of GPU support and training in MAX, and a debate on WSGI/ASGI standards.

Additional discussions in this section involved Cohere data submission, Collision Conference attendance, the Command-R bot's conversational focus, networking and career tips, and internship application insights.

Further discussions in the LM Studio section included a setup caution for Deepseek Coder V2 Lite, LM Studio and Open Interpreter guidelines, help requests for local model loading issues, tips for using AMD cards with LM Studio, Meta's new AI models announcement, issues with Gemini model code generation, struggles with model configuration, RX6600 GPU performance, LM Studio beta releases, and Open Interpreter configuration and usage methods.

Interconnects (Nathan Lambert) Posts

SnailBot, the community moderator, issues a call to the community, and Nathan Lambert shows affection and enthusiasm by adding cute snail emojis. It's a playful and light-hearted interaction that brings a sense of fun to the community discussions.

Perplexity AI Sharing

- Jazz Enthusiasts Delight in New Orleans Jazz: Links to pages like [New Orleans Jazz 1](https://www.perplexity.ai/page/New-Orleans-Jazz-vUaCB8pUTjeg0I56lgYNkA?utm_source=ainews&utm_medium=email&utm_campaign=ainews-to-be-named-9364) and [New Orleans Jazz 2](https://www.perplexity.ai/page/New-Orleans-Jazz-vUaCB8pUTjeg0I56lgYNkA?utm_source=ainews&utm_medium=email&utm_campaign=ainews-to-be-named-9364) were shared, showcasing information on this vibrant genre. These pages likely dive into the rich cultural tapestry and musical legacy of New Orleans jazz.

- Discoveries and Searches Abound: Various members shared search queries and results including [verbena hybrid](https://www.perplexity.ai/search/verbena-hybrid-T6rro2QKSkydZz71snAhRw?utm_source=ainews&utm_medium=email&utm_campaign=ainews-to-be-named-9364) and [Gimme a list](https://www.perplexity.ai/search/gimme-a-list-2JeEyTVgR5aySZ1KthSCWQ?utm_source=ainews&utm_medium=email&utm_campaign=ainews-to-be-named-9364#0). These links direct to resources on the Perplexity AI platform, highlighting the diverse interests within the community.

- Perplexity's In-Depth Page: A link to [Perplexity1 Page](https://www.perplexity.ai/page/Perplexity1-LSIxDHzpRQC2v.4Iu25xtg?utm_source=ainews&utm_medium=email&utm_campaign=ainews-to-be-named-9364) was shared, presumably offering comprehensive insights into Perplexity AI's functionalities. It gives users a chance to delve deeper into the mechanics and applications of Perplexity AI.

- YouTube Video Discussed: A [YouTube](https://www.youtube.com/embed/iz5FeeDBcuk?utm_source=ainews&utm_medium=email&utm_campaign=ainews-to-be-named-9364) video was shared. No description was found for it, but it appears to discuss recent noteworthy events including the US suing Adobe and McDonald's halting their AI drive-thru initiative.

- Miscellaneous Searches Shared: Additional search results like [Who are the](https://www.perplexity.ai/search/who-are-the-pr0f9iy7S1S2bFUfccsdKw?utm_source=ainews&utm_medium=email&utm_campaign=ainews-to-be-named-9364#0) and [Trucage Furiosa](https://www.perplexity.ai/search/trucage-furiosa-_80nrrvwS2GCpAlC3bVatg?utm_source=ainews&utm_medium=email&utm_campaign=ainews-to-be-named-9364) were shared. This indicates ongoing community engagement with a variety of topics through the Perplexity AI platform.

LLM Finetuning Discussions

- Modal gets speedy credit service: A member appreciates the quick credit service and plans to share their experience in detail later.

- Checkpoint volumes lagging behind: A member experiences delays with checkpoint volumes updating. They inquire about expected behavior and mention a specific example.

- LangSmith Billing Issue: A user seeks help with LangSmith billing problems and provides their org ID.

- Experimenting with SFR-Embedding-Mistral: A member shares unusual similarity score behavior and seeks explanations and solutions.

- Clarification on Similarity Scores: A member questions an error in similarity scores presentation by another member.

- Course Enrollment Confirmation: A member mentions not indicating course enrollment on a questionnaire and ensures the information is received by notifying another user.

- Server Disconnected Frustration: A member faces server disconnection issues hindering their task progress.

- Token Limits Explained: Another member explains the token limits for users with serverless setup.

- User requests credit assistance: Assistance requested for credit issues without detailed resolution provided.

- Acknowledgment of receipt: Confirmation of receipt with a thank you message.

- Platform access confirmed: A user expresses concern about seeing an upgrade button but is reassured about platform access.

- Credits expiration clarified: Inquiry about credit expiration is clarified.

- Midnight Rose 70b price drop: Significant decrease in the price of Midnight Rose 70b.

- Users debate understanding of Paris plot in logit prisms: Confusion and clarification on the Paris plot concept in logit prisms.

- Logit prisms and its relationship to DLA: Discussion on the relationship between logit prisms and direct logit attribution.

- Member seeks paper on shuffle-resistant transformer layers: Request for a paper discussing transformer models' resilience to shuffled hidden layers.

LangChain AI Discussion

Eleuther

- Passing vLLM arguments directly into the engine: Users discuss passing vLLM arguments directly into the engine.

LangChain AI

- New User Struggles with LangChain Version: A new member is frustrated with the version difference of LangChain from online tutorials.

- Extracting Data from Scraped Websites: Users seek help in extracting data from websites using LangChain's capabilities.

- Issue with LLMChain Deprecation: Confusion over deprecation of LLMChain class in LangChain 0.1.17.

- Debugging LCEL Pipelines: A member asks for ways to debug LCEL pipeline outputs.

- Handling API Request Errors in Loop: A member encounters errors while looping through API calls.

LangChain AI Share-Your-Work

- Building Serverless Semantic Search: Member shares about building a serverless app with AWS Lambda and Qdrant.

- AgentForge launches on ProductHunt: AgentForge is live on ProductHunt.

- Advanced Research Assistant Beta Test: Member seeks beta testers.

- Environment Setup Advice: Article advises separating environment setup from application code.

- Infinite Backrooms Inspired Demo: Member introduces YouSim.

Tinygrad (George Hotz)

- Code clarity issues in graph.py: Discussion on code clarity in graph.py.

- Pull Request for graph float rounding: Announced pull request for graph float rounding.

Tinygrad (George Hotz) Learn-Tinygrad

- Users discuss various topics like tensor realization, kernel generation, kernel fusion, custom accelerators, and scheduler deep dive.

Laion

- Users discuss RunwayML, DeepMind's video-to-audio research, Meta FAIR's research artifacts, Open-Sora Plan, and img2img model requests.

LAION Research

- Discussions on conversational speech datasets and UC Berkeley's weight space discovery.

Torchtune

- Members discuss MPS vs CUDA output discrepancy, Huggingface cache issue, Huggingface to Torchtune model conversion, and attention mask clarification.

Latent Space AI General Chat

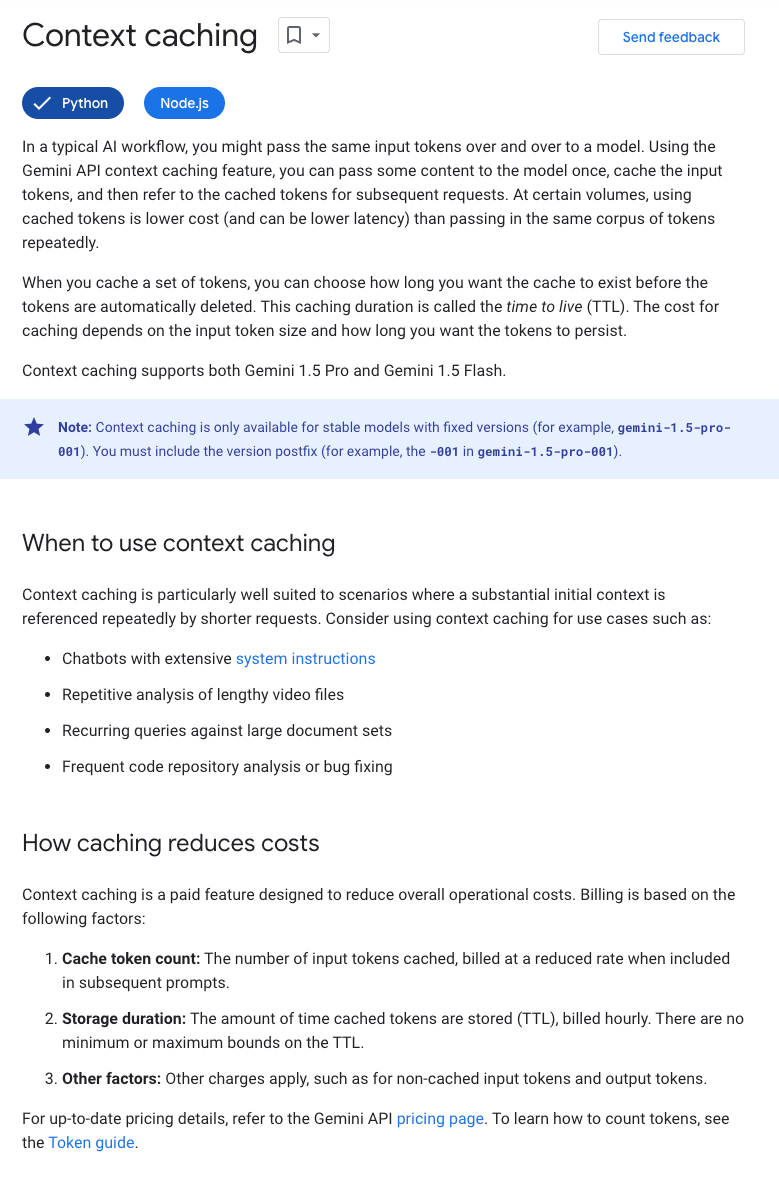

- Discussions on SEO-generated articles, Werner Herzog reading AI output, podcast tools, Meta's new AI models, and Google Gemini API introducing context caching.

AI-Content in OpenInterpreter & AI Stack Devs Discussion

The section discusses updates in OpenInterpreter, including the release of Open Interpreter’s Local III with computer-controlling agents that work offline. Additionally, a tool for automatically naming photos descriptively offline was introduced. In the AI Stack Devs discussion, a system called Agent Hospital simulating the treatment of illnesses with autonomous agents powered by LLMs was introduced. The real-world application of Agent Hospital was discussed, highlighting its benefits for real-world medicare benchmarks.

FAQ

Q: What is DeepSeek-Coder-V2 and how does it outperform other models in coding tasks?

A: DeepSeek-Coder-V2 is a 236B parameter model created by @deepseek_ai, which surpasses models like GPT4-Turbo, Claude3-Opus, Gemini-1.5Pro, and Codestral in coding tasks. It supports 338 programming languages and extends context length from 16K to 128K.

Q: What were the technical details shared about DeepSeek-Coder-V2 by @rohanpaul_ai?

A: According to @rohanpaul_ai, DeepSeek-Coder-V2 was built by further pre-training an intermediate DeepSeek-V2 checkpoint on an additional 6 trillion tokens. It underwent supervised fine-tuning and reinforcement learning using the Group Relative Policy Optimization (GRPO) algorithm.

Q: What performance improvements were highlighted for DeepSeek-Coder-V2 by @_philschmid?

A: DeepSeek-Coder-V2 saw a 12.5% increase in accuracy across multiple coding tasks compared to the previous version. The model is now publicly available for use and benchmarking.

Q: What were some of the discussions and developments in AI-related Discord channels mentioned?

A: The discussions covered new AI models, technical optimizations, licensing issues, community concerns, model performance, platform compatibility, future technology trends, service interruptions, API challenges, ethical debates, and ongoing research in AI development.

Q: What were the topics discussed in the CUDA mode channel related to AI technologies and hardware?

A: Discussions in the CUDA mode channel included debates on Nvidia 5090's RAM, AI capabilities stagnation, RDNA4 and Intel Battlemage competition, search algorithms, model architecture advancements, Cloud TPU usage with JAX, PR reviews, quantization API syntax, and different model architecture implementations.

Q: What were some of the updates in the OpenInterpreter section?

A: In the OpenInterpreter section, updates included the release of Open Interpreter’s Local III with computer-controlling agents for offline work, a tool for naming photos descriptively offline, and discussions on Agent Hospital simulating illness treatment with LLM-powered autonomous agents.
