[AINews] FlashAttention 3, PaliGemma, OpenAI's 5 Levels to Superintelligence • Buttondown

Chapters

AI News FlashAttention 3, PaliGemma, OpenAI's 5 Levels to Superintelligence

AI Discord Recap

Stability.ai (Stable Diffusion)

Discord Discussions on Various AI Topics

HuggingFace Discussions

Unsloth AI and CUDA Modes Discussions

CUDA Mode Torchao

LM Studio Discussions

Perplexity AI and AWS Collaboration

OpenAI's New Models

OpenInterpreter Updates

Interconnects News and Discussions

AI News FlashAttention 3, PaliGemma, OpenAI's 5 Levels to Superintelligence

This issue of AI News focuses on FlashAttention-3, PaliGemma, and OpenAI's five levels toward superintelligence. It covers FlashAttention-3's optimizations for H100 GPUs, the release of PaliGemma as a 3B open vision-language model (VLM), and OpenAI's framework of levels toward superintelligence. It also includes a Twitter recap written by Claude 3.5 Sonnet and a Reddit recap covering open-source model releases such as NuminaMath 7B and updates on LLMs for coding. Notable Reddit threads compared the performance of open LLMs with closed models and discussed a Llama 3 8B model focused on response-format accuracy.

AI Discord Recap

This section summarizes discussions and developments from various AI Discord channels, including updates on AI models, hardware and infrastructure, AI research partnerships, and industry news. Topics range from GPU innovations and quantized language models to generative teaching and new AI research techniques.

Stability.ai (Stable Diffusion)

Blackstone announced plans to invest $50B in AI infrastructure, drawing market attention. An in-depth survey of AI agent architectures prompted debate about future design improvements. ColBERT's efficient handling of large datasets sparked discussion of its possible applications. The ImageBind paper reported strong cross-modal performance, suggesting new directions for multimodal AI research. SBERT's sentence embeddings were discussed as promising for natural language processing tasks.
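The summary doesn't describe how these retrieval models score text. As a toy illustration (2-D vectors stand in for learned token embeddings), the sketch below contrasts ColBERT-style late interaction, which sums each query token's best match across the document tokens ("MaxSim"), with an SBERT-style single sentence vector built by mean pooling. Mean pooling is an assumption here; SBERT models can be configured with other pooling strategies.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def normalize(v):
    # Scale to unit length so dot products become cosine similarities.
    n = math.sqrt(dot(v, v))
    return [x / n for x in v] if n else list(v)


def maxsim_score(query_tokens, doc_tokens):
    """ColBERT-style late interaction: each query token keeps its best
    cosine match among the document tokens, and those matches are summed."""
    q = [normalize(t) for t in query_tokens]
    d = [normalize(t) for t in doc_tokens]
    return sum(max(dot(qt, dt) for dt in d) for qt in q)


def mean_pool(tokens):
    """SBERT-style pooling (assumed): average token vectors into one vector."""
    dim = len(tokens[0])
    return [sum(t[i] for t in tokens) / len(tokens) for i in range(dim)]


def cosine(u, v):
    return dot(normalize(u), normalize(v))


# Toy embeddings: two query tokens, one document token.
query = [[1.0, 0.0], [0.0, 1.0]]
doc = [[1.0, 0.0]]
print(maxsim_score(query, doc))                   # token-level score
print(cosine(mean_pool(query), mean_pool(doc)))   # single-vector score
```

The design difference is where information is compressed: late interaction keeps one vector per token and defers matching to query time, while the pooled approach commits to a single vector per sentence up front.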

Discord Discussions on Various AI Topics

This section covers discussions from several Discord channels on advances and challenges in AI. Topics include comparisons of models such as GPT-4o and Llama3, discrepancies in the documentation for 01's services, scripting 01 to interact with a VPS, collaborative community coding, using FlashAttention-3 to improve performance, migrating dataset formats in Axolotl, TurBcat compatibility tests, WizardLM's new learning approach, and more, with attention to technical details, community collaboration, and industry implications.
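The summary doesn't say which dataset formats were being migrated in Axolotl. As a purely illustrative sketch, assuming the move is from ShareGPT-style records (a `conversations` list with `from`/`value` keys) to OpenAI-style `messages` with `role`/`content` keys, a converter might look like this. The role mapping is an assumption and may need extending for other speaker labels.

```python
import json

# Assumed mapping from ShareGPT "from" labels to chat-message roles.
ROLE_MAP = {"system": "system", "human": "user", "gpt": "assistant"}


def sharegpt_to_messages(record):
    """Convert {"conversations": [{"from", "value"}, ...]} into
    {"messages": [{"role", "content"}, ...]}."""
    messages = []
    for turn in record["conversations"]:
        role = ROLE_MAP.get(turn["from"])
        if role is None:
            raise ValueError(f"unknown speaker label: {turn['from']!r}")
        messages.append({"role": role, "content": turn["value"]})
    return {"messages": messages}


def convert_jsonl(lines):
    """Convert an iterable of JSONL strings, skipping blank lines."""
    for line in lines:
        if line.strip():
            yield json.dumps(sharegpt_to_messages(json.loads(line)))


example = {"conversations": [{"from": "human", "value": "Hi"},
                             {"from": "gpt", "value": "Hello"}]}
print(sharegpt_to_messages(example))
```

Raising on unknown labels, rather than silently dropping turns, makes a half-converted dataset fail loudly instead of training on truncated conversations.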

HuggingFace Discussions

HuggingFace ▷ #today-im-learning (2 messages):

- Triplet Collapse in Embedding Models Explained: A member asked for background on triplet collapse and received an explanation of how triplet loss is used to train an embedding model that identifies individuals from their mouse movements.

- Transfer Learning with Pre-trained Softmax Model: To mitigate triplet collapse, the responding member described first pre-training a regular classification model with N softmax outputs, then transferring it to the embedding model.

- Starting from a pre-trained network keeps the model from collapsing to all-zero embeddings, a local minimum in which the triplet loss stops improving.
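The collapse described above is easy to see numerically. The sketch below is a minimal illustration using a standard hinge-style triplet loss with Euclidean distance (an assumption; the thread did not specify the distance or margin), with toy vectors standing in for learned mouse-movement embeddings.

```python
import math


def euclidean(u, v):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def triplet_loss(anchor, positive, negative, margin=1.0):
    """Hinge-style triplet loss: the anchor should sit closer to the positive
    (same user) than to the negative (different user) by at least `margin`."""
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)


# Collapsed encoder: every trace maps to the zero vector, so both distances
# are 0 and every triplet costs exactly `margin`, no matter who it came from.
zero = [0.0, 0.0, 0.0]
print(triplet_loss(zero, zero, zero, margin=0.2))

# Already-separated embeddings (e.g. from a backbone pre-trained as an
# N-way softmax classifier) can satisfy the margin and reach zero loss.
print(triplet_loss([1.0, 0.0, 0.0], [0.9, 0.1, 0.0], [0.0, 1.0, 0.0], margin=0.2))
```

In a full setup, the backbone would first be trained with cross-entropy over the N users; the softmax head is then dropped and the remaining network is fine-tuned with the triplet loss, so training starts from embeddings that already separate users.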

Unsloth AI and CUDA Modes Discussions

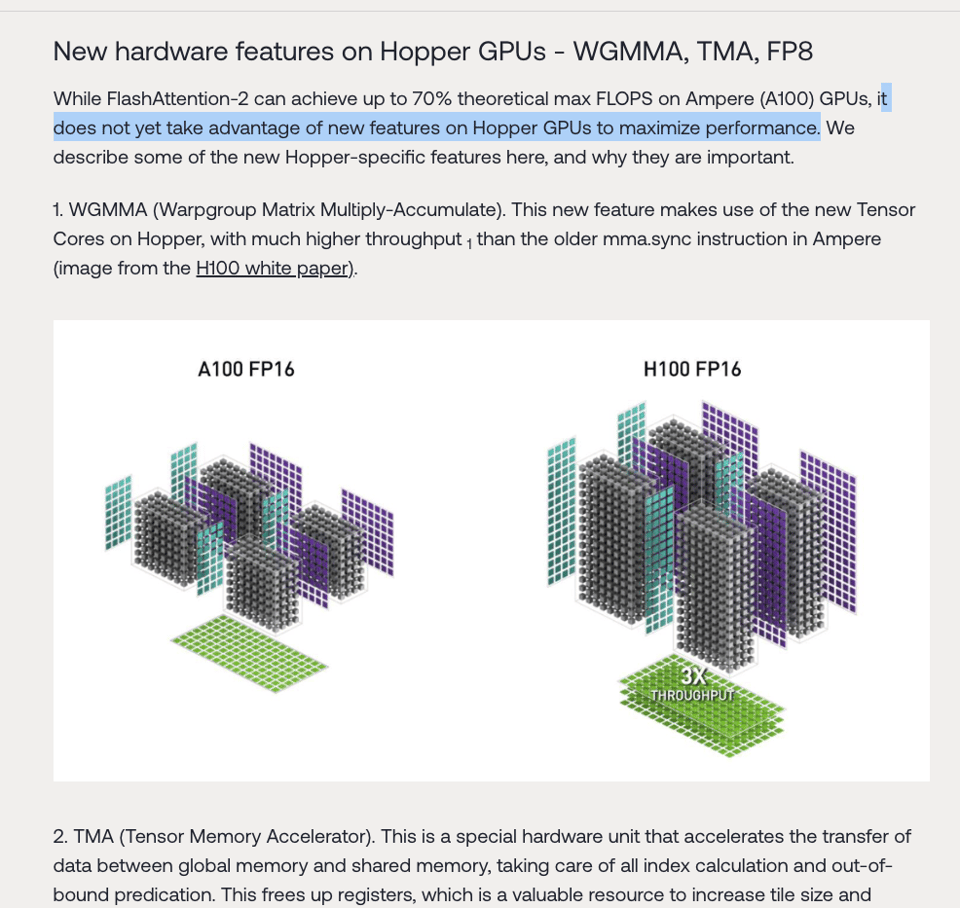

This section continues with discussions from the Unsloth AI and CUDA MODE Discords. Topics include decentralized AI training, challenges in distributed GPU workloads, norm tweaking for LLM quantization, and FlashAttention's speedups for Transformers. Other threads covered model compression for LLMs, using FlashAttention on modern GPUs, and optimizing Triton kernels in PyTorch models.

Newer work discussed includes the Adam-mini optimizer, GrokFast for accelerating grokking in Transformers, MobileLLM for on-device language models, and JEST for data curation during training. Each subsection presents user questions, the ensuing discussion, and the steps taken to resolve specific issues.
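FlashAttention's speedups come from never materializing the full attention matrix: keys and values are processed in blocks while a running softmax is rescaled on the fly. The pure-Python sketch below shows only that online-softmax arithmetic for a single query and checks it against ordinary softmax attention. The GPU-specific parts (tiling into on-chip memory, and FlashAttention-3's Hopper-specific asynchrony and FP8 support) are not modeled, and `block_size` is an illustrative parameter.

```python
import math


def attention_reference(q, keys, values):
    """Ordinary softmax attention for one query vector."""
    scale = 1.0 / math.sqrt(len(q))
    scores = [sum(a * b for a, b in zip(q, k)) * scale for k in keys]
    m = max(scores)
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    dim = len(values[0])
    return [sum(w * v[i] for w, v in zip(weights, values)) / total for i in range(dim)]


def attention_online(q, keys, values, block_size=2):
    """Process keys/values block by block, keeping only a running max (m),
    a running softmax denominator (l), and an unnormalized output (acc)."""
    scale = 1.0 / math.sqrt(len(q))
    m, l = -math.inf, 0.0
    acc = [0.0] * len(values[0])
    for start in range(0, len(keys), block_size):
        k_blk = keys[start:start + block_size]
        v_blk = values[start:start + block_size]
        scores = [sum(a * b for a, b in zip(q, k)) * scale for k in k_blk]
        m_new = max(m, max(scores))
        # Rescale earlier contributions to the new running max
        # (exp(-inf) == 0.0 on the first block).
        correction = math.exp(m - m_new)
        l *= correction
        acc = [x * correction for x in acc]
        for s, v in zip(scores, v_blk):
            p = math.exp(s - m_new)
            l += p
            acc = [x + p * vi for x, vi in zip(acc, v)]
        m = m_new
    return [x / l for x in acc]


q = [1.0, 0.5]
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 0.5], [0.3, -0.2]]
values = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0], [9.0, 10.0]]
ref = attention_reference(q, keys, values)
out = attention_online(q, keys, values, block_size=2)
print(ref, out)
```

Both functions should agree to floating-point precision for any `block_size`; the online version just never needs the whole score row at once.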

CUDA Mode Torchao

CUDA MODE ▷ #torchao (2 messages):

- Support for Smooth Quant and AWQ Algorithms: Smooth Quant and AWQ are confirmed to be supported in the current workflow.
  - A member suggests starting with individual implementations of `to_calibrating_` for each algorithm before evaluating a unified approach.

- Implement `to_calibrating_` for All Algorithms Individually: The `to_calibrating_` function should initially be implemented separately for each algorithm.
  - A later evaluation may merge these into a single flow, similar to the `quantize_` API.
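The channel only names `to_calibrating_` and `quantize_`; the internals of torchao's calibration flow aren't described. As a rough illustration of why each algorithm might want its own calibration step, here is a plain-Python sketch of what a SmoothQuant pass computes: it records per-channel activation maxima, then derives scales s_j = max|X_j|^alpha / max|W_j|^(1 - alpha) that shift quantization difficulty from activations into weights. The class and function names are hypothetical and are not torchao's API, and alpha = 0.5 is an assumed default. AWQ derives its scales differently (by searching over activation-based scales to reduce quantization error), which is one reason separate implementations make sense at first.

```python
class SmoothQuantCalibrator:
    """Hypothetical calibration helper (not torchao's API).

    Tracks per-input-channel activation maxima, then computes SmoothQuant
    smoothing factors s_j = max|X_j|**alpha / max|W_j|**(1 - alpha).
    """

    def __init__(self, num_channels, alpha=0.5):
        self.alpha = alpha
        self.act_absmax = [0.0] * num_channels

    def observe(self, activations):
        # activations: rows of length num_channels, e.g. one row per token.
        for row in activations:
            for j, x in enumerate(row):
                self.act_absmax[j] = max(self.act_absmax[j], abs(x))

    def scales(self, weight, eps=1e-8):
        # weight: out_features rows, each of length num_channels (in_features).
        w_absmax = [max(abs(row[j]) for row in weight) for j in range(len(self.act_absmax))]
        return [
            max(a, eps) ** self.alpha / max(w, eps) ** (1 - self.alpha)
            for a, w in zip(self.act_absmax, w_absmax)
        ]


def apply_smoothing(activations, weight, s):
    """Divide activation channel j by s_j and multiply weight column j by s_j,
    so every dot product x . w is unchanged before quantization."""
    x_s = [[x / sj for x, sj in zip(row, s)] for row in activations]
    w_s = [[w * sj for w, sj in zip(row, s)] for row in weight]
    return x_s, w_s


calib = SmoothQuantCalibrator(num_channels=2)
X = [[10.0, 0.1], [-20.0, 0.2]]  # channel 0 carries outlier-sized activations
W = [[0.5, 1.0], [0.25, 2.0]]
calib.observe(X)
s = calib.scales(W)
X_s, W_s = apply_smoothing(X, W, s)
print(s, X_s, W_s)
```

The layer's output is mathematically unchanged; only the ranges that the later `quantize_`-style step has to cover are rebalanced between activations and weights.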

LM Studio Discussions

This section covers discussions in the LM Studio Discord channels about feature requests, GPU compatibility issues, context overflow bugs, setup advice, models, and hardware. Specific topics include optional assistant-role triggers, GPU compatibility on Linux, bugs in the context overflow policy, running LM Studio behind a proxy, optimization advice for budget rigs, possible integration of Whisper with LM Studio, Flash Attention issues with Gemma-2, handling system prompts for models that do not support them, installing models efficiently with Ollama and LM Studio, and Salesforce's introduction of xLAM-1B. Other threads covered Rust development, the etiquette of asking questions, and the XY problem, as well as the ColBERT paper, the AI agent survey paper, ImageBind's modalities, SBERT's design and training, and multi-agent systems in AI.
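For models whose chat templates have no system role (Gemma-family templates, for example, reject one), a common workaround is to fold the system text into the first user turn. The sketch below illustrates that idea under the assumption of OpenAI-style `role`/`content` messages; it is not a description of how LM Studio itself handles system prompts.

```python
def fold_system_prompt(messages):
    """Merge any system messages into the first user message, for chat
    templates that only accept user/assistant roles. Input is not mutated."""
    system_parts = [m["content"] for m in messages if m["role"] == "system"]
    rest = [dict(m) for m in messages if m["role"] != "system"]
    if not system_parts:
        return rest
    prefix = "\n\n".join(system_parts)
    for m in rest:
        if m["role"] == "user":
            m["content"] = prefix + "\n\n" + m["content"]
            return rest
    # No user turn to attach to: send the system text as its own user turn.
    return [{"role": "user", "content": prefix}] + rest


msgs = [{"role": "system", "content": "Be brief."},
        {"role": "user", "content": "Hi"}]
print(fold_system_prompt(msgs))
```

Copying each message dict keeps the caller's conversation history untouched, so the original system prompt is still available if the user later switches to a model that supports it.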

Perplexity AI and AWS Collaboration

Perplexity AI announced a strategic collaboration with Amazon Web Services (AWS) to bring Perplexity Enterprise Pro to AWS customers through AWS Marketplace. The partnership includes joint events, co-sell engagements, and co-marketing, and uses Amazon Bedrock for generative AI capabilities. Perplexity positions Enterprise Pro as a way for businesses to access and use information through AI-driven search and analytics, and describes the collaboration as a milestone toward giving organizations AI-powered tools with an emphasis on efficiency, productivity, security, and control. A link to the announcement was shared for more information.

OpenAI's New Models

Members discussed creating a decentralized mesh network to which users contribute their computing power, made more feasible by advances in bandwidth and compression. BOINC and crypto projects such as Gridcoin were cited as examples of using tokens to incentivize such networks.

One proposal described a sharded computing platform that could use GPUs with varying VRAM sizes and reward users with tokens for the compute they contribute. Members also mentioned using decentralized compute to optimize CMOS chip configurations, citing the now-decommissioned DHEP@home BOINC project.
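No concrete design was given for the sharded platform. A minimal sketch under stated assumptions: split a model's layers into contiguous shards in proportion to each contributor's VRAM, and pay a hypothetical token reward per layer served. Both the proportional rule and `tokens_per_layer` are illustrative; a real network would also need to weigh bandwidth, uptime, and verification of submitted work.

```python
def shard_layers(num_layers, vram_gb):
    """Split num_layers contiguous layers across workers in proportion to VRAM,
    using largest-remainder rounding so the counts sum to num_layers."""
    total = sum(vram_gb)
    raw = [num_layers * v / total for v in vram_gb]
    counts = [int(r) for r in raw]
    leftover = num_layers - sum(counts)
    # Hand remaining layers to the workers with the largest fractional parts.
    order = sorted(range(len(raw)), key=lambda i: raw[i] - counts[i], reverse=True)
    for i in order[:leftover]:
        counts[i] += 1
    # Convert counts into contiguous [start, end) layer ranges.
    ranges, start = [], 0
    for c in counts:
        ranges.append((start, start + c))
        start += c
    return ranges


def token_rewards(ranges, tokens_per_layer):
    """Hypothetical reward rule: pay each worker per layer it serves."""
    return [(end - start) * tokens_per_layer for start, end in ranges]


shards = shard_layers(32, [24, 12, 8])  # e.g. 24 GB, 12 GB, and 8 GB GPUs
print(shards, token_rewards(shards, tokens_per_layer=5))
```

Contiguous ranges keep each worker's activations flowing to a single next hop, which matters when contributors are connected over consumer bandwidth.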

Members asked whether GGUF models can be run across multiple GPUs in parallel. Responses indicated that this is possible, since GGUF is essentially a format for storing and managing tensors.

A report stated that OpenAI is testing new capabilities in its GPT-4 model that approach human-like reasoning, as part of a tiered progression toward AGI. According to the report, the second tier, 'Reasoners', covers systems capable of doctorate-level problem solving, while later tiers move toward 'Agents' that can take actions autonomously.

Users reported severe lag in Claude AI chats after about 10 responses, making the chat nearly unusable. Speculation pointed to possible memory leaks or backend issues; by comparison, users described GPT-4 models as more stable.

OpenInterpreter Updates

The OpenInterpreter section covers several channels in the community. In the 01 channel, discussions centered on issues with the LLM-Service flag, scripts for remote experiences, and the community contributions driving development. In the general channel, topics included a comparison between GPT-4o and a locally run Llama3, and guidance on where to post.

Interconnects News and Discussions

Recent discussions in the Interconnects Discord covered data curation, FlashAttention, and the LMSYS Chatbot Arena. FlashAttention-3 was noted for boosting efficiency on H100 GPUs, pushing utilization closer to the hardware's peak FLOPs. WizardLM2 relies on WizardArena to evaluate chatbot models through conversational battles. Members also discussed the benefits of paraphrasing in synthetic instruction data, questions about RPO preference tuning, and the importance of various parameters when fine-tuning models.
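Arena-style leaderboards such as LMSYS Chatbot Arena turn pairwise comparisons between models into ratings. The summary doesn't say exactly how WizardArena scores its battles, so here is a generic Elo-update sketch, assuming a K-factor of 32 and a starting rating of 1000 (both arbitrary choices); the model names in the usage line are placeholders.

```python
def expected_score(r_a, r_b):
    """Probability that model A beats model B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400))


def elo_update(r_a, r_b, outcome, k=32):
    """outcome: 1.0 if A wins, 0.0 if B wins, 0.5 for a tie."""
    delta = k * (outcome - expected_score(r_a, r_b))
    return r_a + delta, r_b - delta


def run_battles(battles, initial=1000.0, k=32):
    """battles: iterable of (model_a, model_b, outcome) tuples, in order."""
    ratings = {}
    for a, b, outcome in battles:
        ra = ratings.setdefault(a, initial)
        rb = ratings.setdefault(b, initial)
        ratings[a], ratings[b] = elo_update(ra, rb, outcome, k)
    return ratings


ratings = run_battles([("model-a", "model-b", 1.0),
                       ("model-b", "model-c", 0.5),
                       ("model-a", "model-c", 0.0)])
print(ratings)
```

Sequential Elo updates depend on battle order; fitting all comparisons at once (for example with a Bradley-Terry model) avoids that, at the cost of a batch computation.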

FAQ

Q: What are some recent AI innovations discussed in the article?

A: Recent AI innovations discussed in the article include FlashAttention 3 optimized for H100 GPUs, the release of PaliGemma as a 3B open Vision-Language Model (VLM), and OpenAI's insights on superintelligence levels.

Q: What is the significance of Blackstone's $50B investment in AI infrastructure?

A: Blackstone's $50B investment in AI infrastructure creates market excitement and highlights a significant commitment to the advancement of AI technologies.

Q: What are some notable discussions on Reddit related to AI models?

A: Noteworthy discussions on Reddit include topics such as the performance comparison of open LLMs to closed models, the development of a Llama 3 8B model focusing on response format accuracy, and advancements in open-source AI models like NuminaMath 7B.

Q: What recent partnership was announced between Perplexity AI and Amazon Web Services?

A: Perplexity AI announced a strategic collaboration with Amazon Web Services to bring Perplexity Enterprise Pro to AWS customers through the AWS marketplace, emphasizing joint events, co-sell engagements, and co-marketing efforts.

Q: What ideas came up in the discussions about decentralized mesh networks?

A: Members cited BOINC and Gridcoin as examples of token-incentivized compute networks, proposed a sharded computing platform that accommodates varied VRAM sizes, and mentioned using decentralized compute to optimize CMOS chip configurations.

Q: What capabilities did the recent report attribute to OpenAI's GPT-4 model?

A: According to the report, OpenAI is testing new GPT-4 capabilities that approach human-like reasoning as part of a tiered progression toward AGI; the second tier, 'Reasoners', covers systems capable of doctorate-level problem solving.

Q: What key topics were discussed in the HuggingFace Discord channel?

A: Discussions in the HuggingFace Discord channel included an explanation of triplet collapse in embedding models and how pre-training a softmax classification model, then transferring it to the embedding model, helps avoid that collapse.

Q: What are some of the hardware and infrastructure advancements covered in the article?

A: The article covers GPU-focused work such as FlashAttention speeding up Transformers and Triton kernel optimization in PyTorch models, along with efficiency techniques such as norm tweaking for LLM quantization, the Adam-mini optimizer, and MobileLLM for on-device large language models.

Q: What were some of the key points discussed in the CUDA MODE Discord channel?

A: The CUDA MODE #torchao discussion confirmed support for the Smooth Quant and AWQ algorithms and recommended implementing `to_calibrating_` separately for each algorithm at first, with a possible later merge into a single flow similar to the `quantize_` API.

Q: What were the highlights of the OpenInterpreter section mentioned in the article?

A: The OpenInterpreter section highlighted issues with the LLM-Service flag, remote-experience scripts, a comparison between GPT-4o and a locally run Llama3, guidance on where to post in the general channel, and ongoing development within the OpenInterpreter community.
