[AINews] Dia de las Secuelas (StarCoder, The Stack, Dune, SemiAnalysis)

Chapters

Technical Updates and AI Twitter Summary

AI Twitter Narrative

Discord Channel Summaries

Discord Channel Summary

Mistral AI Discussions on Server Specs, Model Capabilities, and Specialized Models

LM Studio Hardware Discussion

Chat about LM Studio Features and Models

Recent AI Chat Discussions

Project Obsidian

Discussion on Matryoshka Embeddings in Latent Space

Difference in Model Quality and Release of Cheatsheet

LangChain and LangChain AI Updates

Interconnects

AI Discord Channel Discussions

Technical Updates and AI Twitter Summary

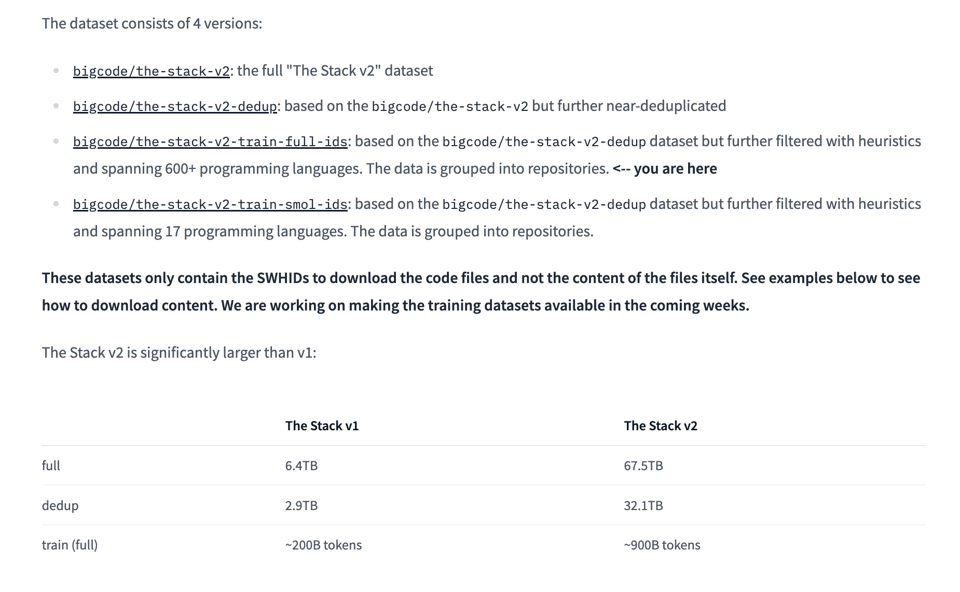

This section covers technical updates in the AI field, chiefly the release of the StarCoder v2 models and The Stack v2 dataset by HuggingFace/BigCode. The summary describes them as major advances in parameter count and training data, and notes that training uses Grouped Query Attention and a Fill-in-the-Middle objective. It also notes that the Table of Contents was removed following user feedback. The AI Twitter Summary portion highlights posts by prominent figures such as François Chollet and Sedielem on a range of AI and machine learning topics. It also touches on executive leadership changes at companies like $SNOW and on industry news, including Gemini 1.5 Pro and Groq chips.
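The Fill-in-the-Middle objective mentioned above can be illustrated by how a training document is rearranged before it is fed to a left-to-right model. The sketch below is a minimal illustration, not the BigCode training pipeline: the sentinel strings and the helper names `to_fim_example` and `split_fim_example` are assumptions made for this example, and the real special tokens depend on the tokenizer in use.

```python
import random
from typing import Tuple

# Placeholder sentinel strings (an assumption for illustration; the real
# special tokens depend on the tokenizer being used).
FIM_PREFIX = "<fim_prefix>"
FIM_SUFFIX = "<fim_suffix>"
FIM_MIDDLE = "<fim_middle>"


def to_fim_example(document: str, rng: random.Random) -> str:
    """Rearrange a document into prefix-suffix-middle (PSM) order."""
    # Pick two cut points that split the text into prefix / middle / suffix.
    a, b = sorted(rng.randint(0, len(document)) for _ in range(2))
    prefix, middle, suffix = document[:a], document[a:b], document[b:]
    # The middle goes last, so a left-to-right model learns to infill it
    # given both the text before and the text after the gap.
    return f"{FIM_PREFIX}{prefix}{FIM_SUFFIX}{suffix}{FIM_MIDDLE}{middle}"


def split_fim_example(example: str) -> Tuple[str, str, str]:
    """Invert to_fim_example and return (prefix, middle, suffix)."""
    rest = example[len(FIM_PREFIX):]
    prefix, rest = rest.split(FIM_SUFFIX, 1)
    suffix, middle = rest.split(FIM_MIDDLE, 1)
    return prefix, middle, suffix
```

At inference time the same layout lets an editor send the code before and after the cursor and ask the model to generate only the missing middle.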

AI Twitter Narrative

The technical discussions on AI and machine learning include reflections on large language models (LLMs) and diffusion distillation by François Chollet and Daniele Grattarola, with an emphasis on critical thinking and on diversified safeguards for models. Leadership transitions at tech organizations such as $SNOW are noted, with posts expressing admiration for the evolving leadership. Memes and humor, like Cristóbal Valenzuela's analogy between airplanes and bicycles, add a lighter perspective. Margaret Mitchell's call for diversity in tech reporting underlines the importance of inclusivity. Practical considerations in AI development, such as abacaj's post-outage backup strategies, reflect a concern for operational resilience.

Discord Channel Summaries

This section provides detailed summaries of conversations and events from various dedicated Discord channels related to AI development and advancements. From discussing the latest research papers and model developments to troubleshooting technical issues and sharing resources, each Discord summary offers insights into the dynamic AI community. Topics range from exploring innovative AI model capabilities, representation learning techniques, and improvements in AI hardware, to engaging in conversations about the integration of new tools and technologies within the field. These summaries capture the essence of ongoing discussions and developments within each Discord community, catering to engineers seeking knowledge, updates, and collaboration opportunities in the AI landscape.

Discord Channel Summary

DiscoResearch Discord Summary

- DiscoLM Template Usage Critical: @bjoernp emphasized proper use of the DiscoLM template for chat context tokenization, with reference to the chat templating documentation on Hugging Face.

- Chunking Code Struggles with llamaindex: @sebastian.bodza had trouble with the llamaindex chunker for code, which output one-liners regardless of settings, suggesting either a bug or a need to adjust the tool.

- Pushing the Boundaries of German AI: @johannhartmann is working on a German RAG model using Deutsche Telekom's data and sought advice on improving the reliability of the German-speaking Mistral 7b model, while @philipmay generated negative samples for RAG datasets.

- German Language Models Battleground: A debate arose over Goliath vs. DiscoLM-120b for German language tasks, with @philipmay and @johannhartmann sharing insights, and @philipmay providing a Goliath model card for further examination.

- Benchmarking German Prompts and Models: @crispstrobe reported that EQ-Bench now includes German prompts, with the GPT-4-1106-preview model leading in performance, and mentioned that translation scripts are part of the benchmark, with the prompts translated effectively by ChatGPT-4-turbo.
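The chat templating point above matters because a chat model expects the conversation to be laid out with the same role markers it saw during training. The sketch below renders messages in a ChatML-style layout as an illustration only: that DiscoLM uses exactly this layout is an assumption that should be checked against the model card, and `render_chatml` is a hypothetical helper, not part of any library.

```python
from typing import Dict, List


def render_chatml(messages: List[Dict[str, str]],
                  add_generation_prompt: bool = True) -> str:
    """Render messages in a ChatML-style layout (assumed format, for illustration)."""
    parts = []
    for message in messages:
        # Each turn is wrapped in start/end markers and tagged with its role.
        parts.append(
            f"<|im_start|>{message['role']}\n{message['content']}<|im_end|>\n"
        )
    if add_generation_prompt:
        # Leave an open assistant turn so the model continues from here.
        parts.append("<|im_start|>assistant\n")
    return "".join(parts)


prompt = render_chatml([
    {"role": "system", "content": "Du bist ein hilfreicher Assistent."},
    {"role": "user", "content": "Was ist RAG?"},
])
```

In practice it is usually safer to let the tokenizer apply the template shipped with the model, for example via `tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)` in Hugging Face Transformers, than to hand-build the string.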

Mistral AI Discussions on Server Specs, Model Capabilities, and Specialized Models

Discussions in this section cover various topics related to Mistral AI, including scrutinizing an investment by Microsoft in Mistral AI, challenges with Mistral function calls, quantization of Mistral models, and using Mistral-7B for generating complex SQL queries. There are also talks about Mistral deployment requirements, debates on optimal server specifications, limitations of quantized models, and self-hosting AI models. Additionally, the section touches on the potential advantages and challenges of training specialized AI models and the need for substantial VRAM to effectively run non-quantized, full-precision models.
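The VRAM point can be made concrete with back-of-the-envelope arithmetic: the memory needed just to hold the weights is roughly the parameter count times the bytes per parameter. The sketch below assumes a round 7 billion parameters for a 7B-class model and counts weights only; the KV cache, activations, and runtime overhead come on top, so real requirements are higher. The function name is hypothetical.

```python
def weight_memory_gib(num_params: float, bits_per_param: float) -> float:
    """Approximate memory for model weights alone, in GiB."""
    bytes_total = num_params * bits_per_param / 8  # 8 bits per byte
    return bytes_total / 2**30                     # bytes -> GiB


# Assumed round figure for a 7B-class model (not an exact parameter count).
PARAMS_7B = 7e9

for label, bits in [("fp32", 32), ("fp16", 16), ("8-bit", 8), ("4-bit", 4)]:
    print(f"{label:>5}: {weight_memory_gib(PARAMS_7B, bits):.1f} GiB")
```

Under these assumptions, half precision needs about 13 GiB for weights alone, while a 4-bit quantization needs about a quarter of that, which is why quantized models fit on consumer GPUs at some cost in quality.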

LM Studio Hardware Discussion

Optimization Tips for Windows 11:

.bambalejo advised users to disable features like core isolation and VM platform on Windows 11 for better performance, and to ensure VirtualizationBasedSecurityStatus is set to 0.

TinyBox Announcement:

senecalouck shared a link with details on the TinyBox, a new hardware offering from TinyCorp.

Chat about LM Studio Features and Models

Inquiring about Image Insertion in LM Studio:

- @heoheo5839 was unsure how to add an image in LM Studio because the 'Assets' bar wasn't visible. @heyitsyorkie explained that to add an image, one must use a model like PsiPi/liuhaotian_llava-v1.5-13b-GGUF/ and ensure that both the vision adapter (mmproj) and the gguf of the model are downloaded, after which the image can be inserted in the input box for the model to describe.

Questions about llava Model Downloads:

- @hypocritipus queried about the possibility of downloading llava supported models directly within LM Studio, alluding to easier accessibility and functionality.

Clarifying llava Model Functionality in LM Studio:

- @wolfspyre questioned whether downloading llava models is a current functionality, suggesting that it might already be supported within LM Studio.

Confirming Vision Adapter Model Use:

- In response to @wolfspyre, @hypocritipus clarified they hadn't tried to use the functionality themselves and were seeking confirmation on whether it was feasible to download both the vision adapter and the primary model simultaneously within LM Studio.

Exploring One-Click Downloads for Vision-Enabled Models:

- @hypocritipus shared an excerpt from the release notes indicating that users need to download a Vision Adapter and a primary model separately. They expressed curiosity about whether there is a one-click solution within LM Studio to simplify this process, where users could download both necessary files with a single action.
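The two-file requirement discussed above (a vision adapter plus the primary model) can be checked with a small script. This is a sketch under assumptions: it relies on the common naming convention that adapter files contain "mmproj" in their name, the file names in the example are hypothetical, and none of this is an LM Studio API.

```python
from typing import Iterable, List, Tuple


def split_llava_files(filenames: Iterable[str]) -> Tuple[List[str], List[str]]:
    """Return (vision_adapters, model_weights) among the .gguf files."""
    adapters: List[str] = []
    weights: List[str] = []
    for name in filenames:
        lower = name.lower()
        if not lower.endswith(".gguf"):
            continue  # ignore READMEs, configs, and other files
        # Assumed convention: adapter files carry "mmproj" in their name.
        (adapters if "mmproj" in lower else weights).append(name)
    return adapters, weights


def is_vision_ready(filenames: Iterable[str]) -> bool:
    """True when both an adapter and a primary model file are present."""
    adapters, weights = split_llava_files(filenames)
    return bool(adapters) and bool(weights)


# Hypothetical directory listing for illustration.
files = ["llava-v1.5-13b-Q5_K_M.gguf", "llava-v1.5-13b-mmproj-model-f16.gguf", "README.md"]
print(is_vision_ready(files))
```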

Recent AI Chat Discussions

The recent AI chat discussions on the HuggingFace and LAION Discord channels cover a variety of topics. Users are exploring tools like DSPy and Gorilla OpenFunctions v2 for model programming and function calling, collaborating on projects like invoice processing, and seeking communities for AI research. Innovative AI models like BitNet b1.58 and Stable Diffusion Deluxe are being highlighted. Users are sharing projects such as a locally running LLM assistant and providing deployment guides for models. Issues with text generation inference and the legality of AI-generated art are also being discussed. Links to repositories, tools, and legal articles related to AI are being shared in these conversations.

Project Obsidian

- QT Node-X Twitter Updates: QT Node-X's Twitter account shared a series of three posts (QT Node-X Tweet 1, QT Node-X Tweet 2, and QT Node-X Tweet 3) showcasing updates on the project.

Discussion on Matryoshka Embeddings in Latent Space

In the Latent Space Discord, users discussed Matryoshka Embeddings alongside several other topics. There was a mention of Noam Shazeer's blog post on coding style, skepticism about AI news pieces, and insights on Lakehouses and data engineering. There were also discussions of the Matryoshka Embeddings paper club, special presentations, and recommended resources such as an in-depth guide on table formats and query engines. The session included engagement with the authors and with resources related to Matryoshka Embeddings, covering deployment, applications, and implications for transformer models.
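The core idea behind Matryoshka embeddings is that a prefix of the full vector is itself a usable, lower-dimensional embedding. The sketch below shows the typical usage pattern of truncating and re-normalizing, assuming the embedding model was trained with a Matryoshka-style objective; for an ordinary embedding model, truncation like this generally loses much more quality. The helper names and the toy vectors are made up for illustration.

```python
import math
from typing import List, Sequence


def truncate_embedding(vec: Sequence[float], dim: int) -> List[float]:
    """Keep the first `dim` components and rescale to unit length."""
    if not 0 < dim <= len(vec):
        raise ValueError("dim must be between 1 and len(vec)")
    head = list(vec[:dim])
    norm = math.sqrt(sum(x * x for x in head))
    if norm == 0.0:
        return head  # nothing to rescale for an all-zero prefix
    return [x / norm for x in head]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy vectors standing in for real model outputs.
query = [0.9, 0.1, 0.3, -0.2]
doc = [0.8, 0.2, -0.1, 0.4]
full_score = cosine(query, doc)
short_score = cosine(truncate_embedding(query, 2), truncate_embedding(doc, 2))
```

A common deployment pattern is to search with the short vectors first for speed and storage savings, then re-rank the top candidates with the full-length vectors.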

Difference in Model Quality and Release of Cheatsheet

Difference in Model Quality: The section highlights differences in response quality between the website and the sonar-medium-online model for a voice chat application API.

Launch of Development Cheatsheet: The section announces the release of 'The Foundation Model Development Cheatsheet' by @hailey_schoelkopf. This resource aims to assist new open model developers and champions transparent model development. It emphasizes dataset documentation and licensing practices, and can be accessed as a PDF paper or an interactive website. Links to further updates are provided in the form of a blog post and Twitter thread.

LangChain and LangChain AI Updates

The section discusses various updates related to LangChain and LangChain AI, including troubleshooting issues with LangServe, inviting users to join LangChain templates on Discord, and sharing information about GenAI Summit San Francisco 2024. Additionally, it covers developments in LangChain tutorials, discussions on LangGraph capabilities, and the introduction of new features like AI Voice Chat app 'Pablo.' The section also includes information about LangChain in the 'share-your-work' channel, where users celebrate book listings and share Discord server invites for projects and feedback solicitation.

Interconnects

In the Interconnects section, Discord users discussed a range of topics, from clarifications by Arthur Mensch about recent announcements to the launch of StarCoder2 and The Stack v2 by BigCodeProject. Meta's plan for Llama 3, Nathan Lambert's excitement for G 1.5 Pro, and a podcast featuring Demis Hassabis of Google DeepMind were also highlighted. Conversations ranged from completely open AI to coincidental name similarities among users and the search for a suitable 'LAMB' emoji.

AI Discord Channel Discussions

This section provides insights from various Discord channels focusing on AI topics. Users discuss anticipated releases, improvements, interest in specific features, testing challenges, search updates, resource inquiries, model comparisons, dataset usage, and recruitment inquiries. The content covers a wide range of AI-related discussions including model performance, training techniques, language model optimization, and collaboration opportunities.

FAQ

Q: What are the updates in the AI field discussed in the section?

A: The updates include the release of the StarCoder v2 models and The Stack v2 dataset by HuggingFace/BigCode, described as major advances in parameter count and training data; the use of Grouped Query Attention and a Fill-in-the-Middle objective in training; the removal of the Table of Contents; AI Twitter discussions; and leadership shifts at companies like $SNOW.

Q: What technical discussions are highlighted in the text?

A: The technical discussions cover large language models (LLMs), diffusion distillation, critical thinking and model safeguarding, reflections by François Chollet and Daniele Grattarola, operational resilience strategies, and Margaret Mitchell's call for diversity in tech reporting.

Q: What are the key points discussed in the Discord summaries related to AI development?

A: The Discord summaries cover discussions on Mistral AI, Mistral-7B model usage for SQL queries, tools like DSPy and Gorilla OpenFunctions v2, project collaborations, issues with text generation inference, and debates on AI-generated art legality.

Q: What is the purpose of 'The Foundation Model Development Cheatsheet' released by @hailey_schoelkopf?

A: 'The Foundation Model Development Cheatsheet' aims to assist new open model developers, promote transparent model development, emphasize dataset documentation and licensing practices, and provide resources in the form of a PDF paper or interactive website.

Q: What are the highlights of the updates related to LangChain and LangChain AI?

A: Updates related to LangChain and LangChain AI include troubleshooting LangServe issues, LangChain templates on Discord, information about GenAI Summit San Francisco 2024, developments in LangChain tutorials, discussions on LangGraph capabilities, and the introduction of new features like the AI Voice Chat app 'Pablo.'

Q: What specific topics are discussed in the Interconnects section of the text?

A: The specific topics discussed in the Interconnects section include recent announcements by Arthur Mensch, launch of StarCoder2 and The Stack v2 by BigCodeProject, Meta's plan for Llama 3, excitement for G 1.5 Pro, a podcast featuring Demis Hassabis of Google DeepMind, discussions on completely open AI, and the search for a suitable 'LAMB' emoji.
