[AINews] DeepMind SIMA: one AI, 9 games, 600 tasks, vision+language ONLY

Chapters

DeepMind SIMA: Generalist AI Agent for 3D Virtual Environments

Model Weight Security Concerns

Alignment Lab AI

AI Engineer Foundation Discord

Labs

Use Cases and Discussions at Perplexity AI

Unsloth AI and Nvidia Projects Discussion

Deep Learning and Model Performance Discussions

Challenges and Explorations in AI Discussions

Deep Dives into OpenAccess AI Collective and LangChain AI

OpenRouter Announcements and Releases

CUDA MODE Related Conversations

AI News Update

DeepMind SIMA: Generalist AI Agent for 3D Virtual Environments

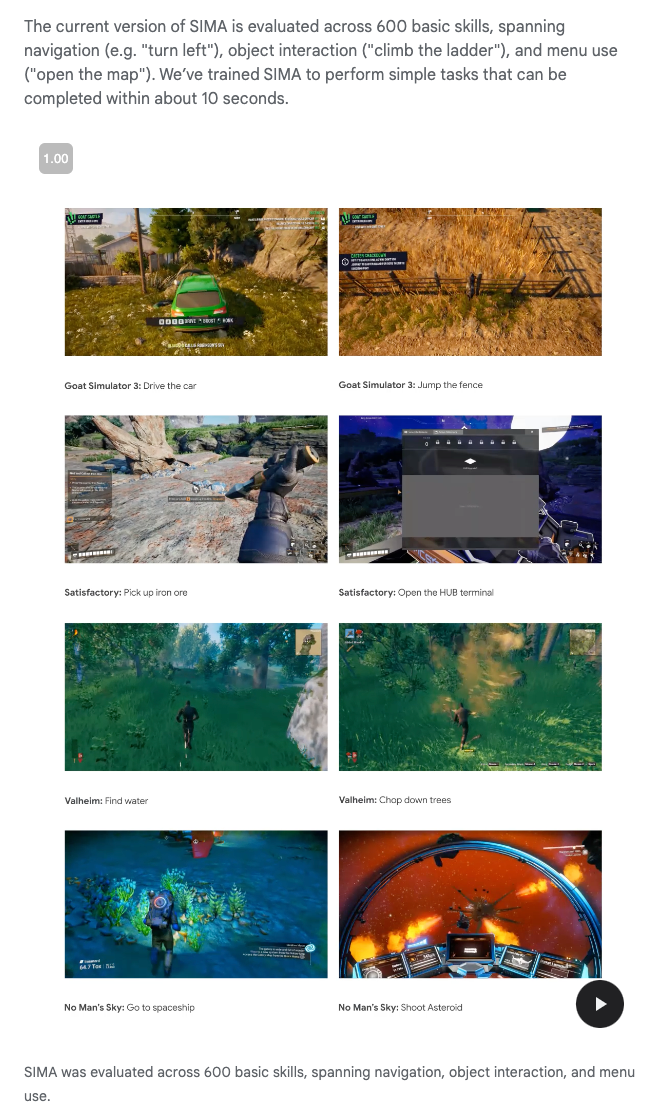

The DeepMind SIMA project involves developing a generalist AI agent that can perform tasks in 9 different games using only screengrabs and natural language instructions. The technical report includes details on the multimodal Transformer utilized and the challenging nature of the 600 tasks. Additionally, the section covers a summary of discussions on AI Twitter, focusing on topics such as AI autonomy in software engineering, large language models, AI agents and demos, AI infrastructure and training, as well as memes and humor in the AI community. The 'Summary of Summaries' section highlights recent releases and advancements in AI models, hardware, benchmarks, frameworks, and tools.

Model Weight Security Concerns

Researchers have raised concerns about the security of model weights, particularly in models like ChatGPT and PaLM-2. The possibility of inferring weights from APIs has sparked debates on AI ethics and the need for future protection measures. Detailed information on the implications and risks of model weight extraction can be found in a recent research paper.

Alignment Lab AI

Join the Visionaries of Multimodal Interpretability:

Soniajoseph_ calls for collaborators on an open-source project aimed at interpretability of multimodal models. This initiative is detailed in a LessWrong post and further discussions can be had on their dedicated Discord server.

In Search of Lightning-Fast Inference:

A guild member seeks the fastest inference method for Phi 2, mentioning the use of an A100 GPU. They're looking into batching for

AI Engineer Foundation Discord

- Plugin Authorization Made Easy: The AI Engineer Foundation suggested implementing config options for plugins to streamline authorization by passing tokens, informed by the structured schema in the Config Options RFC.

- Brainstorming Innovative Projects: Members were invited to propose new projects, adhering to a set of criteria available in the Google Doc guideline, along with a tease for a possible partnership with Microsoft on a prompt file project.

- Meet Devin, the Code Whiz: Cognition Labs unveiled Devin, an AI software engineer heralded for exceptional performance on the SWE-bench coding benchmark, ready to synergize with developer tools in a controlled environment, as detailed in their blog post.

Labs

- Cognition Labs introduced Devin, an autonomous AI software engineer that passed engineering interviews and resolved 13.86% of the GitHub issues in the SWE-bench benchmark, surpassing earlier results on it. Devin's capabilities and achievements are detailed in Cognition Labs' blog post.

- A new paper sparked discussions on inferring weights from language models like ChatGPT and PaLM-2 using their APIs, leading to ethical debates and concerns about future security measures.

- Users expressed dissatisfaction with Google's Gemini API due to its complexity and documentation, suggesting Google is lagging behind competitors in the AI API space.

- Together.ai raised $106M to develop a platform for running generative AI apps at scale, and introduced Sequoia, a method for efficiently serving large LLMs.

- Cerebras unveiled CS-3, the fastest AI chip capable of training models with up to 24 trillion parameters on a single device, marking a significant advancement in AI hardware innovation.

Use Cases and Discussions at Perplexity AI

Eugene Yan discussed various aspects of model training, emphasizing the efficiency and cost-effectiveness of synthetic data. Links were shared on topics such as voice recognition and the potential of Whisper for speech processing, and community engagement was encouraged for paper coverage. Perplexity AI discussions ranged from concerns about plagiarism detection tools, search engine debates, and productivity boosts with AI tools to confusion over Perplexity AI's offerings. Members shared their experiences and concerns, such as anxieties about accuracy, and posted direct links to Perplexity AI search results. In the Unsloth AI channel, discussions covered kernel improvements, Unsloth performance, future updates, and technical support related to AI models.

Unsloth AI and Nvidia Projects Discussion

This section provides insights into the latest updates and discussions related to Unsloth AI projects and Nvidia collaborations. The conversation covers topics such as Mistral support, GitHub repositories for QLoRA finetuning, Docker setups, and speed testing scripts for fine-tuning models. Additionally, discussions on combating dependencies, slow task progress, and model switch solutions are highlighted. The section also delves into improving GPU configurations for Large Language Models (LLMs), suggestions for utilizing NVMe SSDs in model fine-tuning, and the advantages of state-of-the-art language models. Throughout the discussions, community members share helpful links to research papers, GitHub projects, and video resources to enhance knowledge and collaboration within the AI community.

Deep Learning and Model Performance Discussions

The discussions in this section revolve around various topics related to deep learning, model performance, and different AI models. In one exchange, users speculate on bypassing VRAM requirements on macOS and discuss the benefits of upgrading RAM. Another conversation explores running multiple instances of LM Studio and the tweaks needed in GPU pairing. Additionally, users share insights on improving GPU utilization with AMD drivers and discuss troubleshooting methods for ROCm installations. The section also covers discussions on using GPT models for game instructions, exploring Sora's capabilities, and handling large datasets with GPT Chat. Furthermore, members share opinions on the rumored GPT-4.5 model and inquire about self-hosting AI models. Lastly, the section touches on ethical concerns in model hacking, LoRA pre-training, and the capabilities of different language models such as Claude 3 for paper summaries.

Challenges and Explorations in AI Discussions

The discussions in this section cover a variety of topics related to AI, including considerations on tracking upstream Megatron for better integration with Transformer Engine, lobbying dynamics, speculation on AI's role in potential calamities, skepticism around AI-driven governance, concerns about copyright and AI regulation, and interest in AI hardware and software for large-scale inference tasks. Additionally, the section explores topics like mitigating hallucination issues in data generation, effectiveness of cross attention mechanisms, anticipation for the implementation of MoAI, recognition of contributed datasets, and misconceptions around memory optimization for a 30B model. Links provided lead to resources discussing regulatory frameworks for AI, development of new AI models, and advancements in AI infrastructure.

Deep Dives into OpenAccess AI Collective and LangChain AI

In this section, various discussions within the OpenAccess AI Collective and LangChain AI communities are highlighted. The conversations cover topics such as creating an LLM papers database, support queries for Axolotl, open-source model releases, AI infrastructure expansion, chatbot frameworks, and framework updates like Fuyou and DoRA support. Additionally, the LangChain AI community discusses switching chatbot modes, custom LLM usage, and the need for up-to-date documentation. Recent releases are also showcased, including the ReAct agent, the LangChain Chatbot becoming open source, and the MindGuide chatbot for mental health support.

OpenRouter Announcements and Releases

- Database Update Briefly Impacts OpenRouter: OpenRouter experienced a temporary issue with activity row availability due to a database update, lasting around three minutes.

- Claude 3 Haiku Arrives: Claude 3 Haiku, the latest model from Anthropic, is fast and affordable, offering speeds of 120 tokens per second at a cost of 4M prompt tokens per $1.

- Claude 3 Haiku: Fast, Affordable, Multimodal: Claude 3 Haiku is highlighted for its quick responsiveness and affordability, being self-moderated to ensure efficiency.

- Olympia.chat Leveraging OpenRouter: Olympia.chat introduced a platform utilizing ChatGPT, powered by OpenRouter, aiming at solopreneurs and small business owners.

- A Friend's Messenger Chatbot Ready for Testing: Information was shared about a chatbot created for messenger applications, extending testing invitations.
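The Claude 3 Haiku figures quoted above (120 tokens per second, 4M prompt tokens per $1) are easier to compare with other price lists once converted to dollars per million tokens. The following is a minimal sketch of that arithmetic, not an OpenRouter or Anthropic API: the function names are hypothetical, the 120 tokens per second figure is assumed to describe generation speed, and only the prompt-token price is modeled because the announcement gives no output-token price.

```python
# Figures taken from the announcement above.
PROMPT_TOKENS_PER_DOLLAR = 4_000_000
TOKENS_PER_SECOND = 120  # assumed to be generation (output) speed


def dollars_per_million_tokens(tokens_per_dollar: float) -> float:
    """Convert a 'tokens per $1' rate into the more common '$ per 1M tokens'."""
    return 1_000_000 / tokens_per_dollar


def prompt_cost_usd(prompt_tokens: int, tokens_per_dollar: float) -> float:
    """Cost in dollars of sending `prompt_tokens` prompt tokens."""
    return prompt_tokens / tokens_per_dollar


def generation_seconds(output_tokens: int, tokens_per_second: float) -> float:
    """Time in seconds to stream `output_tokens` tokens at a steady rate."""
    return output_tokens / tokens_per_second


if __name__ == "__main__":
    print(dollars_per_million_tokens(PROMPT_TOKENS_PER_DOLLAR))  # 0.25
    print(prompt_cost_usd(10_000, PROMPT_TOKENS_PER_DOLLAR))     # 0.0025
    print(generation_seconds(600, TOKENS_PER_SECOND))            # 5.0
```

Under these assumptions, 4M prompt tokens per $1 works out to $0.25 per million prompt tokens, and a 600-token reply would take about five seconds to stream.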

CUDA MODE Related Conversations

This section includes discussions related to CUDA expertise, CUDA code execution, Nsight Compute GPU compatibility concerns, and Ubuntu CUDA Toolkit issues, as well as queries about CUDA cores, thread execution, and architecture. Additionally, there are conversations about training challenges, patching the Axolotl repository, and performance reports comparing different variants. The section also covers mentions of a fully autonomous AI software engineer named Devin, frustrations with the Twitter user experience, GPT-4 playing classic games, and a creative writing benchmark prototype. Lastly, it includes inquiries on German embeddings, experimentation with Mixtral models, and the search for the best embedding solutions and benchmarks for the German language.

AI News Update

The latest AI news update includes discussions on seeking advice for long-context chatbots, AI tackling PopCap classic games, exploring advanced generative models, seeking collaborators for open-source interpretability of multimodal models, and finding a fast method for performing inference with Phi 2. Additionally, the AI Engineer Foundation meeting covered topics such as plugin config options for authorization, suggestions for new project ideas, and the introduction of Devin, the AI software engineer with capabilities in autonomous software engineering.

FAQ

Q: What is the DeepMind SIMA project about?

A: The DeepMind SIMA project involves developing a generalist AI agent that can perform tasks in 9 different games using only screengrabs and natural language instructions.

Q: What are some key details mentioned in the technical report of the SIMA project?

A: The technical report includes details on the multimodal Transformer utilized and the challenging nature of the 600 tasks.

Q: What are some of the key topics discussed in the 'Summary of Summaries' section of the report?

A: The 'Summary of Summaries' section highlights recent releases and advancements in AI models, hardware, benchmarks, frameworks, and tools.

Q: What are the concerns raised by researchers regarding the security of model weights in AI models like ChatGPT and PaLM-2?

A: Researchers have raised concerns about the security of model weights, particularly when it comes to models like ChatGPT and PaLM-2. The possibility of inferring weights from APIs has sparked debates on AI ethics and the need for future protection measures.

Q: What open-source project related to interpretability of multimodal models is detailed in a LessWrong post?

A: The open-source project related to interpretability of multimodal models is detailed in a LessWrong post by Soniajoseph_.

Q: What advancements were made in AI hardware innovation by Cerebras with the introduction of CS-3?

A: Cerebras unveiled CS-3, the fastest AI chip capable of training models with up to 24 trillion parameters on a single device, marking a significant advancement in AI hardware innovation.
